Building Scalable AI Agents: Infrastructure, Planning, and Security

The key building blocks of AI agents—planning, tool integration, and memory—demand sophisticated infrastructure to function effectively in production environments. As the technology advances, several critical components have emerged as essential for successful deployments.

Development Frameworks & Architecture

The ecosystem for AI agent development has matured, with several key frameworks leading the way:

- AutoGen (Microsoft) – Excels in flexible tool integration and multi-agent orchestration.

- CrewAI – Specializes in role-based collaboration and team simulation.

- LangGraph – Provides robust workflow definition and state management.

- LlamaIndex – Optimizes knowledge integration and retrieval patterns.

While these frameworks offer unique features, successful agents typically share three core architectural components:

- Memory System – Retains context and learns from past interactions.

- Planning System – Breaks down complex tasks into structured steps with built-in validation.

- Tool Integration – Accesses specialized capabilities via APIs and function calling.
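A minimal, framework-free sketch of how the three components fit together. It assumes a hypothetical `Agent` class. The planner is a hard-coded stub standing in for an LLM call, and the tool names and lambdas are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Memory system: keeps a running log of past tool interactions."""
    history: list[str] = field(default_factory=list)

    def remember(self, entry: str) -> None:
        self.history.append(entry)


@dataclass
class Agent:
    # Tool integration: named functions the agent may call (hypothetical tools).
    tools: dict[str, Callable[[str], str]]
    memory: Memory = field(default_factory=Memory)

    def plan(self, task: str) -> list[str]:
        """Planning system (stub): a real agent would ask an LLM for these steps."""
        return ["search", "summarize"]

    def validate(self, steps: list[str]) -> None:
        """Built-in validation: reject plans that reference tools the agent lacks."""
        for name in steps:
            if name not in self.tools:
                raise ValueError(f"unknown tool: {name}")

    def run(self, task: str) -> str:
        steps = self.plan(task)
        self.validate(steps)  # fail before any tool is executed
        data = task
        for name in steps:
            data = self.tools[name](data)  # each step consumes the previous output
            self.memory.remember(f"{name} -> {data}")
        return data


agent = Agent(tools={
    "search": lambda q: f"3 documents about {q}",
    "summarize": lambda text: f"summary of {text}",
})
print(agent.run("refund policy"))  # summary of 3 documents about refund policy
```

Validation runs before the first tool call, so a plan that names a missing tool costs nothing to reject.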

Despite these strong foundations, production deployments often require customization to address high-scale workloads, security requirements, and system integrations.

Planning & Execution

Handling complex tasks requires advanced planning and execution flows, typically structured around:

- Plan Generation – Breaking tasks into manageable steps.

- Plan Validation – Checking steps before execution so flawed plans are caught before they consume compute.

- Execution Monitoring – Tracking progress and handling failures in real time.

- Reflection & Adaptation – Evaluating outcomes and adjusting strategies dynamically.
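The four stages can be sketched as a single loop. This is a minimal illustration, not any framework's implementation. It assumes hypothetical `generate_plan`, `validate`, and `execute` callables supplied by the caller (in practice, plan generation and reflection would be LLM calls), and the retry budget `max_replans` is an arbitrary choice.

```python
from typing import Callable, Optional

Plan = list[str]


def run_with_replanning(
    task: str,
    generate_plan: Callable[[str, Optional[str]], Plan],
    validate: Callable[[Plan], bool],
    execute: Callable[[str], str],
    max_replans: int = 2,
) -> list[str]:
    feedback: Optional[str] = None
    for attempt in range(max_replans + 1):
        # 1. Plan generation, informed by feedback from the previous failure.
        plan = generate_plan(task, feedback)
        # 2. Plan validation: reject a bad plan before spending compute on it.
        if not validate(plan):
            feedback = f"attempt {attempt}: plan failed validation"
            continue
        results: list[str] = []
        try:
            # 3. Execution monitoring: run steps one at a time and catch failures.
            for step in plan:
                results.append(execute(step))
            return results
        except Exception as exc:
            # 4. Reflection & adaptation: feed the failure back into the next plan.
            feedback = f"attempt {attempt}: step {step!r} failed: {exc}"
    raise RuntimeError(f"gave up after {max_replans + 1} attempts; last feedback: {feedback}")


def generate_plan(task: str, feedback: Optional[str]) -> Plan:
    # Hypothetical planner: the first plan contains a failing step; replanning drops it.
    return ["lookup_order", "issue_refund"] if feedback else ["lookup_order", "flaky_step"]


def execute(step: str) -> str:
    if step == "flaky_step":
        raise TimeoutError("upstream API timed out")
    return f"{step}: ok"


print(run_with_replanning("refund order 42", generate_plan, lambda p: len(p) > 0, execute))
# ['lookup_order: ok', 'issue_refund: ok']
```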

An agent’s effectiveness hinges on its ability to:

✅ Generate structured plans by intelligently combining tools and knowledge (e.g., correctly sequencing API calls for a customer refund request).

✅ Validate each task step to prevent errors from compounding.

✅ Optimize computational costs in long-running operations.

✅ Recover from failures through dynamic replanning.

✅ Apply multiple validation strategies, from structural verification to runtime testing.

✅ Collaborate with other agents when consensus-based decisions improve accuracy.
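Structural verification is the cheapest of these validation strategies because it runs before any tool is called. The sketch below applies it to the refund example. It assumes each step declares the tool it calls plus the outputs it needs and produces. The tool names (`lookup_order`, `check_refund_eligibility`, `issue_refund`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    tool: str
    needs: frozenset[str] = frozenset()     # outputs this step consumes
    produces: frozenset[str] = frozenset()  # outputs this step creates


def validate_structure(plan: list[Step], known_tools: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the plan is structurally valid."""
    problems: list[str] = []
    available: set[str] = set()  # outputs produced by earlier steps
    for i, step in enumerate(plan):
        if step.tool not in known_tools:
            problems.append(f"step {i}: unknown tool {step.tool!r}")
        missing = step.needs - available
        if missing:
            problems.append(f"step {i}: {step.tool} needs {sorted(missing)} before it runs")
        available |= step.produces
    return problems


TOOLS = {"lookup_order", "check_refund_eligibility", "issue_refund"}
good = [
    Step("lookup_order", produces=frozenset({"order"})),
    Step("check_refund_eligibility", needs=frozenset({"order"}), produces=frozenset({"eligible"})),
    Step("issue_refund", needs=frozenset({"order", "eligible"})),
]
bad = [good[2], good[0], good[1]]  # refund issued before the order is even looked up

print(validate_structure(good, TOOLS))  # []
print(validate_structure(bad, TOOLS))   # flags issue_refund running before its inputs exist
```

Runtime testing (e.g., dry-running steps against a staging API) would complement this check, since structure alone cannot tell whether a tool call will succeed.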

While multi-agent consensus models improve accuracy, they are computationally expensive. The $200/month price of OpenAI's ChatGPT Pro hints at how costly extra compute per response remains, even at OpenAI's scale. Majority voting pays for every model run, so voting across three or five runs roughly triples or quintuples inference costs for complex tasks. This makes single-agent architectures with robust planning and validation more viable for production use.
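The cost arithmetic is direct. Majority voting over N runs pays for N completions per answer, so cost grows linearly with N. A minimal sketch follows, assuming a hypothetical `ask_model` callable and an illustrative per-call price that is not a real rate.

```python
from collections import Counter
from typing import Callable


def majority_vote(ask_model: Callable[[str], str], prompt: str, n: int) -> tuple[str, float]:
    """Query the model n times and return the most common answer with its vote share."""
    answers = [ask_model(prompt) for _ in range(n)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n


def consensus_cost(cost_per_call: float, n: int) -> float:
    """Each vote is a full model call, so total cost scales linearly with n."""
    return cost_per_call * n


# Illustrative price only: a hypothetical $0.02 per complex-task call.
for n in (1, 3, 5):
    print(n, round(consensus_cost(0.02, n), 2))
# 1 0.02
# 3 0.06
# 5 0.1

# A stand-in "model" that returns canned answers in sequence.
answers = iter(["A", "B", "A", "A", "B"])
print(majority_vote(lambda prompt: next(answers), "Is order 42 refundable?", 5))  # ('A', 0.6)
```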

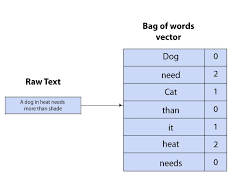

Memory & Retrieval

AI agents require advanced memory management to maintain context and learn from experience. Memory systems typically include:

1. Context Window

- The immediate processing capacity of an AI model (analogous to RAM).

- Some recent models support context windows of 1M+ tokens, enabling much richer context within a single interaction.

2. Working Memory (State Maintained During a Task)

- Active Goals – Tracks ongoing objectives and subtasks.

- Intermediate Results – Stores calculations and outputs.

- Task Status – Maintains execution progress.

- State Verification – Tracks validated facts and corrections.
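These four working-memory fields map naturally onto a small state object. The sketch assumes a hypothetical `WorkingMemory` class. Its field names mirror the list above and are not taken from any particular framework.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any


class Status(Enum):
    PENDING = "pending"
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"


@dataclass
class WorkingMemory:
    active_goals: list[str] = field(default_factory=list)               # ongoing objectives and subtasks
    intermediate_results: dict[str, Any] = field(default_factory=dict)  # calculations and tool outputs
    task_status: dict[str, Status] = field(default_factory=dict)        # execution progress per subtask
    verified_facts: dict[str, Any] = field(default_factory=dict)        # facts that passed validation

    def start(self, goal: str) -> None:
        self.active_goals.append(goal)
        self.task_status[goal] = Status.RUNNING

    def complete(self, goal: str, result: Any) -> None:
        self.intermediate_results[goal] = result
        self.task_status[goal] = Status.DONE
        self.active_goals.remove(goal)

    def verify(self, key: str, value: Any) -> None:
        """Record a checked fact, overwriting (i.e., correcting) any earlier value."""
        self.verified_facts[key] = value


wm = WorkingMemory()
wm.start("fetch order")
wm.complete("fetch order", {"order_id": 42, "total": 59.99})
wm.verify("order_total", 59.99)
print(wm.task_status["fetch order"], wm.active_goals)  # Status.DONE []
```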

Key context management techniques:

- Context Optimization – Prioritizing essential information so it fits within the context window.

- Memory Management – Moving data dynamically between short-term and long-term storage.
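Both techniques can be combined in one routine. It keeps the most recent messages that fit a token budget and moves older ones to long-term storage. This sketch assumes a crude whitespace word count as a stand-in for a real tokenizer and a plain list as the long-term store. A production system would use the model's own tokenizer and a database or vector store.

```python
def count_tokens(text: str) -> int:
    # Stand-in for a real tokenizer: counts whitespace-separated words.
    return len(text.split())


def fit_context(messages: list[str], budget: int, long_term: list[str]) -> list[str]:
    """Keep the newest messages that fit within `budget` tokens; archive the rest."""
    kept: list[str] = []
    used = 0
    for i in range(len(messages) - 1, -1, -1):  # walk from newest to oldest
        cost = count_tokens(messages[i])
        if used + cost > budget:
            # This message and everything older move to long-term storage, oldest first.
            long_term.extend(messages[: i + 1])
            break
        kept.append(messages[i])
        used += cost
    kept.reverse()  # restore chronological order
    return kept


archive: list[str] = []
history = ["hello there", "my order 42 arrived damaged", "can I get a refund", "yes please"]
print(fit_context(history, budget=8, long_term=archive))  # ['can I get a refund', 'yes please']
print(archive)  # ['hello there', 'my order 42 arrived damaged']
```

In this example the order number ends up in the archive. That is why trimming is usually paired with retrieval from long-term storage rather than used alone.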

3. Long-Term Memory & Knowledge Management

AI agents rely on structured storage systems for persistent knowledge:

- Knowledge Graphs (e.g., Neo4j, Zep) – Representing entities and relationships.

- Virtual Memory (e.g., Letta/MemGPT) – Paging information between the context window and external storage, much like an operating system's virtual memory.
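At its simplest, a knowledge graph stores facts as (subject, relation, object) triples that can be queried by pattern. The toy in-memory sketch below assumes nothing about Neo4j's or Zep's actual APIs. A graph database adds persistence, richer indexing, and a query language such as Neo4j's Cypher on top of the same idea.

```python
from collections import defaultdict
from typing import Optional

Triple = tuple[str, str, str]


class TripleStore:
    """Toy knowledge graph: entities linked by named relations."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()
        self.by_subject: dict[str, set[Triple]] = defaultdict(set)  # index for subject lookups

    def add(self, subject: str, relation: str, obj: str) -> None:
        triple = (subject, relation, obj)
        self.triples.add(triple)
        self.by_subject[subject].add(triple)

    def query(self, subject: Optional[str] = None, relation: Optional[str] = None,
              obj: Optional[str] = None) -> list[Triple]:
        """Return matching triples in sorted order; None acts as a wildcard."""
        candidates = self.by_subject.get(subject, set()) if subject is not None else self.triples
        return sorted(
            t for t in candidates
            if (relation is None or t[1] == relation) and (obj is None or t[2] == obj)
        )


kg = TripleStore()
kg.add("alice", "works_at", "Acme")
kg.add("alice", "prefers", "email")
kg.add("bob", "works_at", "Acme")
print(kg.query(relation="works_at", obj="Acme"))
# [('alice', 'works_at', 'Acme'), ('bob', 'works_at', 'Acme')]
print(kg.query(subject="alice", relation="prefers"))
# [('alice', 'prefers', 'email')]
```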

Advanced Memory Capabilities

- Compound Task Handling – Maintaining accuracy across multi-step workflows.

- Continuous Learning – Automatically constructing knowledge graphs from interactions.

- Memory Optimization – Managing context efficiently to reduce hallucinations and API costs.

Open protocols such as Anthropic's Model Context Protocol (MCP) are emerging to standardize how agents connect to external tools, data sources, and memory stores, but challenges remain in balancing computational efficiency, consistency, and real-time retrieval.

Security & Execution

As AI agents gain autonomy, security and auditability become critical. Production deployments require multiple layers of protection:

1. Tool Access Control

- Restricting what operations agents can perform.
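A common way to enforce this is a deny-by-default allowlist, checked before any tool runs. This is a minimal sketch. The role names, tool names, and the hard-coded policy are hypothetical; real deployments would typically load the policy from configuration.

```python
from typing import Any, Callable


class ToolNotAllowed(PermissionError):
    pass


# Hypothetical policy: which tools each agent role may call.
ALLOWED_TOOLS: dict[str, frozenset[str]] = {
    "support_agent": frozenset({"lookup_order", "issue_refund"}),
    "research_agent": frozenset({"web_search"}),
}


def call_tool(role: str, tool_name: str, registry: dict[str, Callable[..., Any]], **kwargs: Any) -> Any:
    """Run a tool only if the role is explicitly allowed to use it (deny by default)."""
    if tool_name not in ALLOWED_TOOLS.get(role, frozenset()):
        raise ToolNotAllowed(f"{role} may not call {tool_name}")
    return registry[tool_name](**kwargs)


# Hypothetical tool implementations.
registry: dict[str, Callable[..., Any]] = {
    "lookup_order": lambda order_id: {"order_id": order_id, "status": "shipped"},
    "issue_refund": lambda order_id, amount: f"refunded {amount} on order {order_id}",
    "web_search": lambda query: [f"result for {query}"],
}

print(call_tool("support_agent", "lookup_order", registry, order_id=42))
# {'order_id': 42, 'status': 'shipped'}
try:
    call_tool("research_agent", "lookup_order", registry, order_id=42)
except ToolNotAllowed as err:
    print(err)  # research_agent may not call lookup_order
```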

2. Execution Validation

- Verifying generated plans before execution.

3. Secure Execution Environments

- Platforms like e2b.dev and CodeSandbox provide sandboxed environments to safely run AI-generated code.

4. API Governance & Access Control

- Implementing granular permissions to limit agent access.
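Granular permissions go a step further than restricting which tools an agent may call: they constrain how a permitted API may be used. This sketch assumes hypothetical scope strings (`orders:read`, `refunds:write`) and a per-call refund cap. The names and limits are illustrative, not any particular API gateway's policy format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    scopes: frozenset[str]    # e.g. {"orders:read", "refunds:write"}
    max_refund: float = 0.0   # per-call ceiling on refund amounts


class PermissionDenied(Exception):
    pass


def authorize(grant: Grant, scope: str, refund_amount: float = 0.0) -> None:
    """Raise PermissionDenied unless the grant covers both this scope and this amount."""
    if scope not in grant.scopes:
        raise PermissionDenied(f"missing scope {scope}")
    if refund_amount > grant.max_refund:
        raise PermissionDenied(f"refund {refund_amount:.2f} exceeds limit {grant.max_refund:.2f}")


# Hypothetical grant: read orders and refund up to $100 per call.
support = Grant(scopes=frozenset({"orders:read", "refunds:write"}), max_refund=100.0)

authorize(support, "orders:read")                        # passes
authorize(support, "refunds:write", refund_amount=45.0)  # passes
try:
    authorize(support, "refunds:write", refund_amount=250.0)
except PermissionDenied as err:
    print(err)  # refund 250.00 exceeds limit 100.00
```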

5. Monitoring & Observability

- Platforms like LangSmith and AgentOps track:

  - Errors

  - Resource utilization

  - Performance metrics

6. Audit Trails

- Maintaining detailed logs of agent decision-making and system interactions.
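Monitoring and audit trails can share one mechanism: wrap every tool call so it emits a structured record of what was called, with which arguments, how long it took, and whether it failed. This sketch uses only the Python standard library. The record fields are an assumption, not LangSmith's or AgentOps' schema, and the in-memory list stands in for durable, append-only log storage.

```python
import functools
import json
import time
from typing import Any, Callable

AUDIT_LOG: list[str] = []  # stand-in for durable, append-only storage


def audited(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record each call's name, arguments, duration, and outcome as a JSON line."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        record: dict[str, Any] = {
            "tool": fn.__name__, "args": repr(args), "kwargs": repr(kwargs), "ts": time.time(),
        }
        start = time.perf_counter()
        try:
            result = fn(*args, **kwargs)
            record["status"] = "ok"
            return result
        except Exception as exc:
            record["status"] = "error"
            record["error"] = repr(exc)
            raise  # audit the failure, but let the caller handle it
        finally:
            # Runs on both success and failure, so every call leaves a record.
            record["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
            AUDIT_LOG.append(json.dumps(record))
    return wrapper


@audited
def issue_refund(order_id: int, amount: float) -> str:  # hypothetical tool
    if amount <= 0:
        raise ValueError("amount must be positive")
    return f"refunded {amount:.2f} on order {order_id}"


issue_refund(42, 19.5)
try:
    issue_refund(43, -1)
except ValueError:
    pass
for line in AUDIT_LOG:
    print(line)
```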

These security measures must balance flexibility, reliability, and operational control to ensure trustworthy AI-driven automation.

Conclusion

Building production-ready AI agents requires carefully designed infrastructure that balances:

✅ Advanced memory systems for context retention.

✅ Sophisticated planning capabilities to break down tasks.

✅ Secure execution environments with strong access controls.

While AI agents offer immense potential, their adoption remains largely experimental across industries. Organizations must strategically evaluate where AI agents justify their added complexity, ensuring that they deliver clear, measurable benefits over traditional AI models.