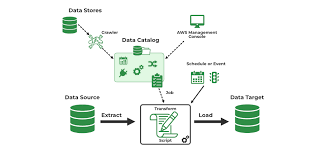

The rapid rise of Software as a Service (SaaS) solutions has led to data silos across different platforms, making it challenging to consolidate insights. Effective data analytics depends on the ability to seamlessly integrate data from various systems by identifying, gathering, cleansing, and combining it into a unified format. AWS Glue, a serverless data integration service, simplifies this process with scalable, efficient, and cost-effective solutions for unifying data from multiple sources. By using AWS Glue, organizations can streamline data integration, minimize silos, and enhance agility in managing data pipelines, unlocking the full potential of their data for analytics, decision-making, and innovation.

This insight explores the new Salesforce connector for AWS Glue and demonstrates how to build a modern Extract, Transform, and Load (ETL) pipeline using AWS Glue ETL scripts.

Introducing the Salesforce Connector for AWS Glue

To meet diverse data integration needs, AWS Glue now supports SaaS connectivity for Salesforce. This enables users to quickly preview, transfer, and query customer relationship management (CRM) data, while dynamically fetching the schema. With the Salesforce connector, users can ingest and transform CRM data and load it into any AWS Glue-supported destination, such as Amazon S3, in preferred formats like Apache Iceberg, Apache Hudi, and Delta Lake. It also supports reverse ETL use cases, enabling data to be written back to Salesforce.

Key Benefits:

- Seamlessly integrates with AWS Glue’s native capabilities and is production-ready for any data integration workload.

- Works on top of AWS Glue and Apache Spark, offering efficient, distributed data processing.

Solution Overview

For this use case, we retrieve the full load of a Salesforce account object into a data lake on Amazon S3 and capture incremental changes. The solution also enables updates to certain fields in the data lake and synchronizes them back to Salesforce.

The process involves creating two ETL jobs using AWS Glue with the Salesforce connector. The first job ingests the Salesforce account object into an Apache Iceberg-format data lake on Amazon S3. The second job captures updates and pushes them back to Salesforce.

Prerequisites:

- Create an S3 bucket for storage.

- Sign up for a Salesforce account.

- Set up an AWS Identity and Access Management (IAM) role with policies for AWS Glue to access resources like S3 and AWS Secrets Manager.

- Configure the Salesforce connection in AWS Glue using OAuth2 and Secrets Manager.
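
The IAM prerequisite above might translate into a policy along these lines. This is a rough sketch under assumptions: the bucket name and secret ARN are placeholders, the function name is hypothetical, and the action list is a plausible minimum rather than the exact set the connector requires. The AWS-managed `AWSGlueServiceRole` policy would typically be attached to the role as well.

```python
import json


def glue_job_policy(bucket: str, secret_arn: str) -> dict:
    """Build an IAM policy document for a Glue job role (illustrative only)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read and write data lake objects.
                "Sid": "DataLakeObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                # List the bucket itself.
                "Sid": "DataLakeBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # Read the secret that holds the Salesforce OAuth2 credentials.
                "Sid": "SalesforceSecret",
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "secretsmanager:DescribeSecret",
                ],
                "Resource": secret_arn,
            },
        ],
    }


# Placeholder values; substitute your own bucket and secret ARN.
policy = glue_job_policy(
    "example-salesforce-lake",
    "arn:aws:secretsmanager:us-east-1:111122223333:secret:example-salesforce",
)
policy_json = json.dumps(policy, indent=2)
```

The resulting JSON can be pasted into the IAM console or passed to whatever tooling you use to create the role.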

Creating the ETL Pipeline

Step 1: Ingest Salesforce Account Object

Using the AWS Glue console, create a new job to transfer the Salesforce account object into an Apache Iceberg-format transactional data lake in Amazon S3. The script checks whether the account table already exists: if it does, it performs an upsert; otherwise, it creates a new table.
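
The exists-then-upsert logic described above can be sketched as a small helper that chooses the Spark SQL statement to run. This is a minimal sketch, not the original job script: the function name, the `glue_catalog` catalog prefix, the `account_updates` staging view, and the `Id` merge key are assumptions for illustration. `MERGE INTO` with `UPDATE SET *` and `INSERT *` is the upsert syntax Iceberg supports in Spark SQL.

```python
def build_account_load_sql(
    table_exists: bool,
    table: str = "glue_catalog.glue_etl_salesforce_db.account",
    staging_view: str = "account_updates",
    key: str = "Id",
) -> str:
    """Return the Spark SQL statement for loading Salesforce accounts.

    If the Iceberg table already exists, upsert the staged rows into it;
    otherwise create the table from the staged rows.
    """
    if table_exists:
        # Upsert: update rows whose key matches, insert the rest.
        return (
            f"MERGE INTO {table} AS t\n"
            f"USING {staging_view} AS s\n"
            f"ON t.{key} = s.{key}\n"
            "WHEN MATCHED THEN UPDATE SET *\n"
            "WHEN NOT MATCHED THEN INSERT *"
        )
    # First run: create the Iceberg table from the staged rows.
    return (
        f"CREATE TABLE {table}\n"
        "USING iceberg\n"
        f"AS SELECT * FROM {staging_view}"
    )


first_run_sql = build_account_load_sql(table_exists=False)
later_run_sql = build_account_load_sql(table_exists=True)
```

In a Glue job, the DataFrame read from Salesforce would be registered with `createOrReplaceTempView("account_updates")` and the chosen statement passed to `spark.sql(...)`.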

Step 2: Push Changes Back to Salesforce

Create a second ETL job to update Salesforce with changes made in the data lake. This job writes the updated account records from Amazon S3 back to Salesforce.
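
As a hedged illustration of the write-back step, the helper below assembles connection options for a Salesforce write. The option keys (`connectionName`, `ENTITY_NAME`, `API_VERSION`, `WRITE_OPERATION`, `ID_FIELD_NAMES`) follow my reading of the AWS Glue Salesforce connector documentation and should be verified against the current docs. The function name, the connection name, and the API version value are placeholders.

```python
from typing import Optional

# Write operations assumed to be accepted by the connector.
ALLOWED_OPERATIONS = {"INSERT", "UPDATE", "UPSERT", "DELETE"}


def salesforce_write_options(
    connection_name: str,
    entity: str = "Account",
    api_version: str = "v60.0",
    operation: str = "UPDATE",
    id_field: Optional[str] = None,
) -> dict:
    """Build connection options for writing records back to Salesforce."""
    if operation not in ALLOWED_OPERATIONS:
        raise ValueError(f"unsupported write operation: {operation}")
    options = {
        "connectionName": connection_name,
        "ENTITY_NAME": entity,
        "API_VERSION": api_version,
        "WRITE_OPERATION": operation,
    }
    if id_field is not None:
        # Field used to match existing Salesforce records.
        options["ID_FIELD_NAMES"] = id_field
    return options


write_options = salesforce_write_options("Salesforce_Connection")

# Inside a Glue job (the awsglue library is only available in the Glue
# runtime), the options would be used roughly like this:
#
#   glueContext.write_dynamic_frame.from_options(
#       frame=updated_accounts,          # hypothetical DynamicFrame
#       connection_type="salesforce",
#       connection_options=write_options,
#   )
```

Rejecting unknown operations before the job runs avoids discovering a typo only after the Spark stage has started.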

Example Query

    SELECT id, name, type, active__c, upsellopportunity__c, lastmodifieddate
    FROM "glue_etl_salesforce_db"."account";

Additional Considerations

You can schedule the ETL jobs using AWS Glue job triggers or integrate them with other AWS services like AWS Lambda and Amazon EventBridge for advanced workflows. Additionally, AWS Glue supports importing deleted Salesforce records by configuring the IMPORT_DELETED_RECORDS option.
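
Both points above can be sketched in a few lines. The first helper builds read options with `IMPORT_DELETED_RECORDS` enabled; the second builds the request for a scheduled trigger in the shape accepted by boto3's Glue `create_trigger` call. The helper names, job name, trigger name, schedule, and the `"true"` string value are assumptions for illustration, so check the option format against the connector documentation.

```python
def deleted_records_read_options(
    connection_name: str,
    entity: str = "Account",
    api_version: str = "v60.0",
) -> dict:
    """Read options that also import deleted Salesforce records."""
    return {
        "connectionName": connection_name,
        "ENTITY_NAME": entity,
        "API_VERSION": api_version,
        # Assumed to be passed as a string value.
        "IMPORT_DELETED_RECORDS": "true",
    }


def scheduled_trigger_request(trigger_name: str, job_names: list, cron: str) -> dict:
    """Keyword arguments for boto3's glue.create_trigger (scheduled type)."""
    return {
        "Name": trigger_name,
        "Type": "SCHEDULED",
        # AWS cron expressions have six fields: min hour dom month dow year.
        "Schedule": f"cron({cron})",
        "Actions": [{"JobName": name} for name in job_names],
        "StartOnCreation": True,
    }


read_options = deleted_records_read_options("Salesforce_Connection")
trigger_request = scheduled_trigger_request(
    "nightly-salesforce-sync",       # hypothetical trigger name
    ["salesforce-account-ingest"],   # hypothetical job name
    "0 2 * * ? *",                   # every day at 02:00 UTC
)

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("glue").create_trigger(**trigger_request)
```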

Clean Up

After completing the process, clean up the resources used in AWS Glue, including jobs, connections, Secrets Manager secrets, IAM roles, and the S3 bucket to avoid incurring unnecessary charges.

Conclusion

The AWS Glue connector for Salesforce simplifies the analytics pipeline, accelerates insights, and supports data-driven decision-making. Because AWS Glue is serverless, there is no infrastructure to manage, which makes it a cost-effective and agile way for organizations to integrate data and meet their analytics needs.