The EU AI Act is a complex piece of legislation, packed with various sections, definitions, and guidelines, making it challenging for organizations to navigate. However, understanding the EU AI Act is crucial for companies aiming to innovate with AI while staying compliant with both legal and ethical standards.

Arnoud Engelfriet, chief knowledge officer at ICTRecht, an Amsterdam-based legal services firm, specializes in IT, privacy, security, and data law. As the head of ICTRecht Academy, he is responsible for educating others on AI legislation, including the AI Act.

In his book AI and Algorithms: Mastering Legal and Ethical Compliance, published by Technics Publications, Engelfriet explores the intersection of AI legislation and ethical AI development, using the AI Act as a key example. He emphasizes that while new AI guidelines can raise concerns about creativity and compliance, organizations need to grasp the current and future legal landscape to build trustworthy AI systems.

Balancing Compliance and Innovation

The much-anticipated AI Act entered into force on 1 August 2024, with its obligations phasing in over periods ranging from six months to three years. Many businesses worry that the regulations might slow down AI innovation, especially given the rapid pace of technological advancements.

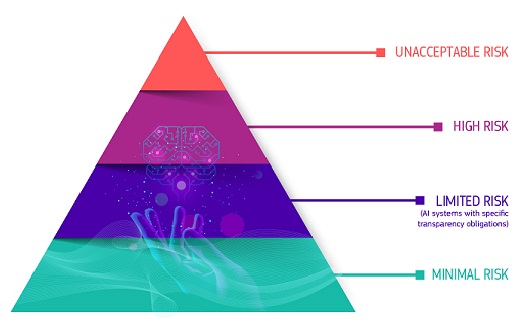

Engelfriet acknowledges this tension, noting that “compliance and innovation have always been somewhat at odds.” However, he believes the act’s flexible, tiered approach offers space for businesses to adapt. For instance, the inclusion of regulatory sandboxes allows companies to test AI systems safely in a controlled environment before placing them on the market. Engelfriet suggests that while innovation might slow down, the safety and trustworthiness of AI systems will improve.

Ensuring Trustworthy AI

The AI Act aims to promote “trustworthy AI,” a term that became central to discussions in 2019, when the EU’s High-Level Expert Group on AI published its Ethics Guidelines for Trustworthy AI; the first draft of the act itself followed in 2021. Although the concept remains somewhat undefined, the guidelines outline three key characteristics of trustworthy AI: legality, technical robustness, and ethical soundness.

Engelfriet underscores that trust in AI systems is ultimately about trusting the humans behind them. “You cannot really trust a machine,” he explains, “but you can trust its designers and operators.” The AI Act requires transparency around how AI systems function, ensuring they reliably perform their intended tasks, such as making automated decisions or serving as chatbots.

Ethics has gained even more prominence with the rise of generative AI. Engelfriet highlights the fragmented nature of AI ethics guidelines, which address everything from data protection to bias prevention. The EU’s Assessment List for Trustworthy AI provides a framework to guide organizations in applying ethical standards, though Engelfriet notes that it may need to be tailored to specific industry needs.

The Role of AI Compliance Officers

Given the complexity of AI regulations, organizations may find it overwhelming to manage compliance efforts. To meet this growing need, Engelfriet recommends appointing AI compliance officers to help companies integrate AI responsibly into their operations.

ICTRecht has also developed a course, based on AI and Algorithms, to teach employees how to navigate AI compliance. Participants from various roles—particularly those in data, privacy, and risk functions—attend the course to expand their knowledge in this increasingly important area.

Salesforce is developing Trailblazer content to address these challenges as well.

As with GDPR, Engelfriet believes the AI Act will set the tone for future AI regulations. He advises businesses to proactively engage with the AI Act to ensure they are prepared for the evolving regulatory landscape.

For help assessing your risks under the EU AI Act, contact Tectonic today.