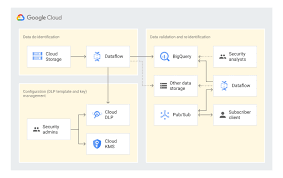

Using Google Cloud Data Loss Prevention with Salesforce for Sensitive Data Handling

This insight describes the move from detecting and classifying sensitive data to preventing data loss with Google Cloud Data Loss Prevention (DLP). Sensitive Information De-identification for Salesforce, with Salesforce as the data source, demonstrates how personal, health, credential, and financial information in unstructured data can be de-identified in near real time.

Overview of Google Cloud DLP

Google Cloud DLP is a fully managed service designed to help discover, classify, and protect sensitive data. Beyond detection, it supports prevention through services that mask sensitive information and measure re-identification risk.

Objective

The goal was to demonstrate that sensitive information in unstructured data can be redacted at scale. Specifically, the test was whether sensitive data such as credit card numbers, tax file numbers, and health care numbers, entered into Salesforce communications (Emails, Files, and Chatter), could be detected and redacted.

Constraints Tested

- Redact passwords, cloud API keys, health card numbers, and credit card numbers

- Redact sensitive information in text and images in near real-time

- Process 100 records/min and provide scalable storage

- Maintain a redundant design for unexpected outages

- Work with data sources beyond Salesforce

De-identifying Data with Google Cloud DLP API

Instead of detailing the setup, this section focuses on the key areas of design.

Google Design Decisions

Supporting Disparate Data Sources with Multiple Integration Patterns and Redundant Design

- Integration Patterns

  - Real-time: An event-driven pattern that operates asynchronously to support near real-time use cases. Because it runs asynchronously, it scales without affecting the user experience.

  - Batch: Supports applications that do not need real-time processing and covers outages in the real-time path by polling for unprocessed records. It can also delete records from Salesforce after archiving them into BigQuery, which prevents data growth, and retrospectively de-identify data if the rules change.

- Storage

  - BigQuery houses all de-identified audit data. Google Cloud DLP runs against it as a native tool, and it scales to multiple data sources.

- Services

  - Each application that needs data de-identification has two microservices: one hosted on Google App Engine for inbound events and one Cloud Function scheduled to run recurrently. Development teams can choose their own programming languages, temporary storage, and deployment models. This lets each team select the appropriate de-identification tool and data types.
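The relationship between the real-time and batch paths can be sketched in a few lines. This is a minimal illustration, not the author's implementation: `AuditRecord`, `handle_event`, `sweep_unprocessed`, and `fake_deidentify` are hypothetical names, the store is an in-memory dictionary, and the de-identification call is a stand-in for the DLP service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AuditRecord:
    record_id: str
    text: str
    processed: bool = False


def handle_event(record: AuditRecord, deidentify: Callable[[str], str]) -> None:
    """Real-time path: de-identify a single record when its event arrives."""
    record.text = deidentify(record.text)
    record.processed = True


def sweep_unprocessed(store: Dict[str, AuditRecord],
                      deidentify: Callable[[str], str]) -> List[str]:
    """Batch path: poll for records the real-time path missed and process them."""
    recovered = []
    for record in store.values():
        if not record.processed:
            handle_event(record, deidentify)
            recovered.append(record.record_id)
    return recovered


def fake_deidentify(text: str) -> str:
    # Stand-in for the DLP call (assumption); it only rewrites one literal word.
    return text.replace("secret", "[redacted]")


if __name__ == "__main__":
    store = {
        "a1": AuditRecord("a1", "secret one"),
        "a2": AuditRecord("a2", "secret two"),
    }
    handle_event(store["a1"], fake_deidentify)       # event delivered normally
    print(sweep_unprocessed(store, fake_deidentify))  # the sweep picks up the missed record
```

Both paths share one processing function, so a record missed during an outage ends up in the same state as one handled in real time.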

Salesforce Data Source

De-identification targets include email addresses, Australian Medicare card numbers, GCP API keys, passwords, and credit card numbers. Credit card numbers are masked with asterisks, while other sensitive data is replaced with a label naming the information type, which keeps the text readable (e.g., jane@secretemail.com becomes [redacted-email-address]).

Sample Requests to Google De-identification Service

JSON Structure to De-identify Text Using Google Cloud DLP API

{

// JSON structure

}
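Since the JSON body is not reproduced here, the following Python sketch shows one plausible shape for a request to the DLP REST method `projects.content.deidentify`. It is an assumption-laden illustration, not the author's request: the infoType names are recalled from the DLP infoType reference and should be verified, and the `redaction_label` format is a hypothetical helper chosen to reproduce the `[redacted-email-address]` example. The block only builds the body; it makes no network call.

```python
import json

# InfoType names as recalled from the DLP infoType reference (assumption; verify before use).
MASKED_INFO_TYPES = ["CREDIT_CARD_NUMBER"]
REPLACED_INFO_TYPES = [
    "EMAIL_ADDRESS",
    "AUSTRALIA_MEDICARE_NUMBER",
    "GCP_API_KEY",
    "PASSWORD",
]


def redaction_label(info_type: str) -> str:
    """Hypothetical label format, e.g. EMAIL_ADDRESS -> [redacted-email-address]."""
    return "[redacted-" + info_type.lower().replace("_", "-") + "]"


def build_text_request(text: str) -> dict:
    """Build a request body that masks card numbers and replaces other findings."""
    transformations = [
        {
            # Credit card numbers are masked character by character with asterisks.
            "infoTypes": [{"name": name} for name in MASKED_INFO_TYPES],
            "primitiveTransformation": {
                "characterMaskConfig": {"maskingCharacter": "*"}
            },
        }
    ]
    for name in REPLACED_INFO_TYPES:
        transformations.append(
            {
                # Every other finding is replaced by a readable label.
                "infoTypes": [{"name": name}],
                "primitiveTransformation": {
                    "replaceConfig": {
                        "newValue": {"stringValue": redaction_label(name)}
                    }
                },
            }
        )
    all_types = MASKED_INFO_TYPES + REPLACED_INFO_TYPES
    return {
        "item": {"value": text},
        "inspectConfig": {"infoTypes": [{"name": n} for n in all_types]},
        "deidentifyConfig": {
            "infoTypeTransformations": {"transformations": transformations}
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_text_request("Contact jane@secretemail.com"), indent=2))
```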

JSON Structure to De-identify Images Using Google Cloud DLP API

{

// JSON structure

}
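For images, a comparable sketch builds a body for the DLP REST method `projects.image.redact`. As with the text example, this is an illustration under assumptions rather than the author's request: `build_image_request` and `BYTES_TYPES` are hypothetical names, and the infoType passed in is the caller's choice. No network call is made.

```python
import base64

# Maps a short format name to the byte-item type expected in the request body.
BYTES_TYPES = {"jpeg": "IMAGE_JPEG", "bmp": "IMAGE_BMP", "png": "IMAGE_PNG"}


def build_image_request(image_bytes: bytes, image_format: str, info_types: list) -> dict:
    """Build a request body asking DLP to redact the given infoTypes in an image."""
    return {
        "byteItem": {
            "type": BYTES_TYPES[image_format],
            # JSON cannot carry raw bytes, so the image is sent base64-encoded.
            "data": base64.b64encode(image_bytes).decode("ascii"),
        },
        "inspectConfig": {"infoTypes": [{"name": n} for n in info_types]},
        # One redaction config per infoType; findings are covered in the output image.
        "imageRedactionConfigs": [{"infoType": {"name": n}} for n in info_types],
    }
```

An unsupported format name raises `KeyError`, which keeps unsupported files from being sent to the service by accident.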

Salesforce Design Decisions

- Data Sources, DLP Processing, and Storage

  - The solution should cover the common places where customers may enter sensitive data. The data sources include ContentVersion, FeedItem, FeedComment, LiveChatTranscript, EmailMessage, and text fields in standard and custom objects.

- Streaming Updates from Salesforce

  - Options for Streaming Updates: The chosen option was an event-driven integration pattern with an Apex trigger framework to preprocess data.

  - Custom Object (DLP_Audit__c): A central custom object that gives preprocessed data a consistent shape and streams updates.

- Other Integration Options

  - Synchronous Apex: Not recommended due to potential governor limits and performance issues.

  - Asynchronous Apex: Mitigates governor limits but may result in delays and out-of-order execution.
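The idea of giving every source a consistent shape before streaming can be illustrated as follows. The actual preprocessing runs in an Apex trigger framework; this Python sketch only shows the shape of the mapping. The source field names (`Body`, `CommentBody`, `TextBody`) are standard Salesforce fields, while every `__c` field on the audit record is a hypothetical name, not the author's schema.

```python
# Field that holds the free text on each source object.
TEXT_FIELD_BY_OBJECT = {
    "FeedItem": "Body",
    "FeedComment": "CommentBody",
    "EmailMessage": "TextBody",
    "LiveChatTranscript": "Body",
}


def to_audit_record(object_name: str, record: dict) -> dict:
    """Normalize a source record into one common audit shape."""
    text_field = TEXT_FIELD_BY_OBJECT[object_name]
    return {
        # Hypothetical audit fields; the real DLP_Audit__c schema is not shown in the source.
        "Source_Object__c": object_name,
        "Source_Record_Id__c": record["Id"],
        "Source_Field__c": text_field,
        "Payload__c": record.get(text_field) or "",
        "Status__c": "Pending",
    }
```

With one shape for all sources, the downstream service needs only a single parser regardless of which Salesforce object produced the event.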

Redundancy and Batch Processing

A scheduled batch job allows for recovery by polling for unprocessed records. To handle large data volumes (e.g., 360,000 records over 5 days), the Salesforce Bulk API is used to run queries and updates in large batches, which reduces the number of API calls.
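The effect of batch size on call volume is simple arithmetic, sketched below. The batch size of 10,000 is an assumption chosen for illustration, and `average_rate_per_minute` and `chunk` are hypothetical helper names.

```python
from typing import Iterator, List


def average_rate_per_minute(total_records: int, days: int) -> float:
    """Average arrival rate implied by a backlog accumulated over a number of days."""
    return total_records / (days * 24 * 60)


def chunk(ids: List[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive slices of at most batch_size record IDs."""
    for start in range(0, len(ids), batch_size):
        yield ids[start:start + batch_size]


if __name__ == "__main__":
    backlog = list(range(360_000))
    print(average_rate_per_minute(len(backlog), 5))   # 50.0 records per minute
    print(len(list(chunk(backlog, 10_000))))          # 36 batches
```

At an assumed 10,000 records per batch, the 360,000-record backlog needs 36 batches, and its average arrival rate of 50 records per minute sits under the 100 records/min processing target.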

Sensitive Information De-identification

Google Cloud Data Loss Prevention detects and protects assets that contain sensitive information, supporting a wide range of use cases across an enterprise.

Proven Capabilities:

- Redacting free text in Chatter posts

- Redacting inbound emails

- Redacting values in fields in standard and custom objects

- Redacting images (JPEG)

Considerations and Lessons Learned

Enhanced Email: Redaction must cover both Task and EmailMessage records. Read-only EmailMessage records are handled by deleting and recreating them.

Files: The architecture assumes files with sensitive data can be deleted and replaced with redacted versions.

Audit Fields: When recreating a record, set its CreatedDate and LastModifiedDate fields from the original record's dates.
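The delete-and-recreate approach with preserved audit dates might look like the sketch below. It is an assumption, not the author's code: `build_replacement` is a hypothetical helper, the list of copied fields is illustrative, and only the payload for the new record is built (no Salesforce call is made).

```python
# Illustrative subset of EmailMessage fields carried over unchanged (assumption).
COPIED_FIELDS = ("Subject", "FromAddress", "ToAddress", "ParentId")
AUDIT_FIELDS = ("CreatedDate", "LastModifiedDate")


def build_replacement(original: dict, redacted_body: str) -> dict:
    """Build the payload for a recreated record that keeps the original audit dates."""
    replacement = {f: original[f] for f in COPIED_FIELDS if f in original}
    replacement["TextBody"] = redacted_body
    for field in AUDIT_FIELDS:
        # Copy the original dates so the recreated record keeps its place in the timeline.
        replacement[field] = original[field]
    return replacement
```

The original record's `Id` is deliberately not copied, since the recreated record receives a new one.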

Field History Tracking: Do not enable history tracking on fields intended for de-identification; track shadow fields instead.

Image De-identification: Limited to JPEG, BMP, and PNG formats; DOCX and PDF are not yet supported.