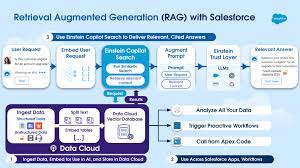

How Data Cloud Vector Databases Work

1. Ingest Unstructured Data in Data Cloud

A new unstructured data pipeline ingests unstructured data relevant to case deflection, such as product manuals or knowledge articles on upgrade eligibility, into Data Cloud and stores it as unstructured data model objects.

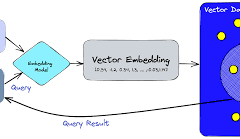

2. Chunk and Transform Data for Use in AI

In Data Cloud, teams can then select the data they want to use in processes like search and chunk it into small segments. Each segment is converted into an embedding: a numeric representation of the data optimized for use in AI algorithms.

The conversion is done through the Einstein Trust Layer, which securely calls a special type of LLM called an “embedding model” to create the embeddings. The embeddings are then indexed for use in search across the Einstein 1 platform alongside structured data.
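To make the chunk-then-embed step concrete, here is a minimal sketch in Python. It is not Data Cloud's implementation: `chunk_words` and `toy_embed` are hypothetical names, the word-window chunking strategy is an assumption, and the hashed bag-of-words vector only stands in for a real embedding model so the example runs without external services.

```python
import hashlib
import math


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Stand-in for an embedding model (assumption): hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dims] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


# Usage: chunk a document, then embed every chunk.
manual = "To return a product, open your order history and select the item. " * 20
embeddings = [toy_embed(chunk) for chunk in chunk_words(manual, size=40, overlap=8)]
```

The overlap keeps a sentence that straddles a chunk boundary fully visible in at least one chunk, which is a common reason to prefer overlapping windows over hard cuts.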

3. Store Embeddings in Data Cloud Vector Database

In addition to supporting chunking and indexing of data, Data Cloud now natively supports storage of embeddings – a concept called “vector storage”. This frees up time for teams to innovate with AI instead of managing and securing an integration to an external vector database.
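As an illustration of what “vector storage” means in practice, the sketch below keeps each embedding next to the chunk text and source it came from. It is an in-memory toy under stated assumptions: `VectorRecord` and `InMemoryVectorStore` are hypothetical names and do not correspond to Data Cloud objects or APIs.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VectorRecord:
    chunk_id: str           # unique key for the chunk
    source: str             # document the chunk came from, kept for later citation
    text: str               # original chunk text
    embedding: list[float]  # vector produced by an embedding model


@dataclass
class InMemoryVectorStore:
    dims: int
    _records: dict[str, VectorRecord] = field(default_factory=dict)

    def upsert(self, record: VectorRecord) -> None:
        """Insert a record, or replace the existing record with the same chunk_id."""
        if len(record.embedding) != self.dims:
            raise ValueError(f"expected {self.dims} dimensions, got {len(record.embedding)}")
        self._records[record.chunk_id] = record

    def get(self, chunk_id: str) -> Optional[VectorRecord]:
        return self._records.get(chunk_id)

    def by_source(self, source: str) -> list[VectorRecord]:
        """All stored chunks that belong to one source document."""
        return [r for r in self._records.values() if r.source == source]

    def __len__(self) -> int:
        return len(self._records)


# Usage: store two chunks from the same (made-up) knowledge article.
store = InMemoryVectorStore(dims=3)
store.upsert(VectorRecord("kb-1#0", "kb-1", "How to return a product", [0.1, 0.9, 0.0]))
store.upsert(VectorRecord("kb-1#1", "kb-1", "Refund timelines", [0.2, 0.7, 0.1]))
```

Storing the source and text with each vector is what later lets a search result point back to the passage it was drawn from.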

4. Analyze and Act on Unstructured Data

Use familiar platform tools like Flow, Apex, and Tableau to work with unstructured data, for example by clustering customer feedback by semantic similarity or creating automations that alert teams when sentiment changes significantly.
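The two examples in this step can be sketched generically in Python. This is not how Flow, Apex, or Tableau implement them: `greedy_clusters` and `sentiment_shifted` are hypothetical helpers, the greedy threshold approach is one simple clustering choice among many, and the threshold values are arbitrary assumptions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def greedy_clusters(vectors: list[list[float]], threshold: float = 0.8) -> list[list[int]]:
    """Put each vector in the first cluster whose seed is at least `threshold` similar,
    otherwise start a new cluster with this vector as its seed. Returns index lists."""
    seeds: list[int] = []
    clusters: list[list[int]] = []
    for i, vec in enumerate(vectors):
        for c, seed in enumerate(seeds):
            if cosine(vec, vectors[seed]) >= threshold:
                clusters[c].append(i)
                break
        else:
            seeds.append(i)
            clusters.append([i])
    return clusters


def sentiment_shifted(previous: list[float], current: list[float], min_delta: float = 0.2) -> bool:
    """True when mean sentiment moved by at least `min_delta` between two windows."""
    if not previous or not current:
        return False
    mean_prev = sum(previous) / len(previous)
    mean_curr = sum(current) / len(current)
    return abs(mean_curr - mean_prev) >= min_delta


# Usage: four feedback embeddings form two groups; sentiment drops between two weeks.
feedback = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.95]]
groups = greedy_clusters(feedback, threshold=0.8)
alert = sentiment_shifted([0.6, 0.8], [0.2, 0.4])
```

In an automation, the boolean from `sentiment_shifted` would be the condition that triggers the team notification.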

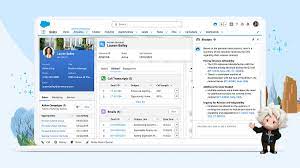

5. Deploy AI Search in Einstein Copilot to Deflect Cases

Once relevant data, such as knowledge articles, is securely embedded and stored in Data Cloud’s vector database, it can also be activated for use in Einstein AI Search within Einstein Copilot. For example, when a customer visits a self-service portal and asks how to return a product, Einstein Copilot performs semantic search: it converts the user query into an embedding, compares that embedding to the embedded data in Data Cloud, and retrieves the most semantically relevant information for its answer, citing the sources it pulled from.
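The query flow described above (embed the query, compare it to stored embeddings, return the closest passages with their sources) can be sketched as follows. This is an illustrative assumption, not Einstein Copilot's implementation: all names are hypothetical, the passages are made up, and `keyword_embed` is a deliberately tiny stand-in for a real embedding model.

```python
import math
from typing import Callable, NamedTuple


class Passage(NamedTuple):
    source: str
    text: str
    embedding: list[float]


class Hit(NamedTuple):
    score: float
    source: str
    text: str


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_search(query: str, passages: list[Passage],
                    embed: Callable[[str], list[float]], top_k: int = 3) -> list[Hit]:
    """Embed the query, score every passage by cosine similarity, return the best top_k."""
    query_vec = embed(query)
    hits = [Hit(cosine(query_vec, p.embedding), p.source, p.text) for p in passages]
    hits.sort(key=lambda h: h.score, reverse=True)
    return hits[:top_k]


def cited_sources(hits: list[Hit]) -> list[str]:
    """Unique sources in rank order, for citing alongside the generated answer."""
    seen: list[str] = []
    for hit in hits:
        if hit.source not in seen:
            seen.append(hit.source)
    return seen


# Toy embedding (assumption): counts of a few keywords instead of a learned model.
VOCAB = ["return", "refund", "upgrade", "battery"]


def keyword_embed(text: str) -> list[float]:
    words = text.lower().split()
    return [float(words.count(w)) for w in VOCAB]


docs = {
    "returns-policy.md": "To return a product request a refund label",
    "upgrade-guide.md": "Check upgrade eligibility before you upgrade",
    "battery-manual.md": "Replace the battery yearly",
}
passages = [Passage(src, txt, keyword_embed(txt)) for src, txt in docs.items()]
hits = semantic_search("How do I return a product", passages, keyword_embed, top_k=2)
```

A production system would replace the linear scan with an approximate nearest-neighbor index, but the ranking idea is the same.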

The end result is AI-powered search that understands the intent behind a question and retrieves not just article links but the exact passages that best answer it. The customer’s preferred LLM then summarizes those passages into a concise, actionable answer, boosting customer satisfaction while deflecting cases.