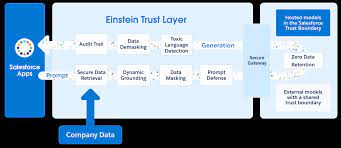

The Einstein Trust Layer is a secure AI architecture built natively into the Salesforce Platform. Designed to meet enterprise security standards, it lets teams benefit from generative AI without compromising their customer data, while also letting companies use their trusted data to improve generative AI responses:

- Integrated and grounded: Built into every Einstein Copilot by default, the Trust Layer grounds and enriches generative prompts in trusted company data through an integration with Salesforce Data Cloud.

- Zero-data retention and PII protection: Companies can be confident that their data will never be retained by third-party LLM providers. Masking of customers' personally identifiable information (PII) adds a further layer of data privacy.

- Toxicity awareness and compliance-ready AI monitoring: A safety-detector LLM guards against toxicity and brand risk by “scoring” AI generations, providing confidence that responses are safe. Additionally, every AI interaction is captured in a secure, monitored audit trail, giving companies visibility into and control over how their data is used.

Trusted AI starts with securely grounded prompts.

A prompt is a canvas for giving large language models (LLMs) detailed context and instructions. The Einstein Trust Layer lets you responsibly ground all of your prompts in customer data and mask that data when the prompt is shared with LLMs*. With our Zero Retention architecture, none of your data is stored outside of Salesforce.
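To make the masking idea more concrete, here is a minimal, generic sketch of placeholder-based masking in Python. It is not Salesforce's implementation, whose internals are not described here. It assumes that only email addresses count as PII, and the names `mask_pii`, `unmask`, and `EMAIL_RE` are hypothetical. Sensitive values are swapped for tokens before the prompt leaves the trust boundary, and the tokens are swapped back into the model's response afterwards.

```python
import re

# Hypothetical, simplified detector: this sketch treats only email addresses as PII.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def mask_pii(prompt: str) -> tuple[str, dict[str, str]]:
    """Replace each email address with a placeholder token.

    Returns the masked prompt and a token -> original-value mapping,
    which would stay inside the trust boundary.
    """
    mapping: dict[str, str] = {}

    def _replace(match: re.Match) -> str:
        value = match.group(0)
        # Reuse the existing token if this value was already masked.
        for token, original in mapping.items():
            if original == value:
                return token
        token = f"<EMAIL_{len(mapping)}>"
        mapping[token] = value
        return token

    return EMAIL_RE.sub(_replace, prompt), mapping


def unmask(response: str, mapping: dict[str, str]) -> str:
    """Restore the original values in a model response using the saved mapping."""
    for token, original in mapping.items():
        response = response.replace(token, original)
    return response
```

For example, `mask_pii("Email jane.doe@example.com about her order.")` returns the masked prompt `"Email <EMAIL_0> about her order."` together with the mapping `{"<EMAIL_0>": "jane.doe@example.com"}`; only the masked text would be sent to the model. A production system would need to detect many more PII types (names, phone numbers, account numbers) than this single regular expression.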

Salesforce gives customers control over how their data is used for AI. Whether you use our own Salesforce-hosted models or external models that are part of our Shared Trust Boundary, such as OpenAI, no context is stored: the large language model forgets both the prompt and the output as soon as the output is processed.
