Best Practices for Building a RAG LLM Chatbot

In today’s competitive landscape, a robust Retrieval-Augmented Generation (RAG) Large Language Model (LLM) chatbot can provide a significant edge. However, implementing such a system involves multiple steps and challenges. Below is a guide to help you navigate this journey effectively.

1. Define Clear Objectives

Start by identifying your goals:

- Are you enhancing your search interface with semantic capabilities?

- Do you want to integrate domain-specific knowledge?

- Is your focus on creating a customer-facing chatbot or exposing internal APIs?

A clear purpose will drive your design and implementation decisions.

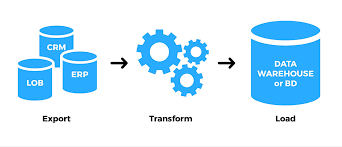

2. Prepare Your Data

Your chatbot’s success relies heavily on the quality and structure of your data.

Assess Your Data Format:

- Structured Data: Convert data in formats like CSV or JSON into text for easier indexing with vector databases (tools like LangChain can help).

- Tabular Data: Data with rows and columns might require transformation or enrichment to support complex queries.

- Textual Data: Documents, articles, or logs often suit vector processing but may need filtering or reorganization.
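Here is a minimal sketch of the structured-data step, using only Python's standard library. It turns each CSV row into a short "column: value" text document that an embedding model can index. The column names and sample data are hypothetical. Document loaders in libraries such as LangChain take a similar row-per-document approach.

```python
import csv
import io
from typing import Dict, List


def rows_to_documents(csv_text: str) -> List[Dict[str, object]]:
    """Convert each CSV row into a text document plus metadata for a vector store."""
    reader = csv.DictReader(io.StringIO(csv_text))
    documents = []
    for row_number, row in enumerate(reader, start=1):
        # One "column: value" line per field keeps the header next to each value,
        # so the embedded text still says what each number or label means.
        text = "\n".join(f"{column}: {value}" for column, value in row.items())
        documents.append({"text": text, "metadata": {"row": row_number}})
    return documents


# Hypothetical product catalog used only for illustration.
sample = "sku,name,price_usd\nA-100,Trail Shoe,89.99\nB-200,Rain Jacket,120.00\n"
for doc in rows_to_documents(sample):
    print(doc["text"], doc["metadata"], sep="\n", end="\n\n")
```

The row number is kept as metadata so that an answer can later be traced back to its source record.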

Enrich Your Data:

- Add contextual information by integrating external sources like knowledge bases.

- Annotate data to label key entities or relationships, improving your model’s accuracy.
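One lightweight way to annotate data is to tag known entities from a curated glossary and store the labels as metadata on each chunk, so that retrieval can later filter on them. The sketch below does this; the glossary and labels are hypothetical. Larger projects often use a trained named-entity recognition model instead of a fixed list.

```python
import re
from typing import Dict, List

# Hypothetical glossary mapping domain terms to entity labels.
GLOSSARY: Dict[str, str] = {
    "Trail Shoe": "PRODUCT",
    "Rain Jacket": "PRODUCT",
    "Gore-Tex": "MATERIAL",
}


def annotate(text: str, glossary: Dict[str, str] = GLOSSARY) -> Dict[str, object]:
    """Return the text together with the glossary entities found in it."""
    entities: List[Dict[str, str]] = []
    for term, label in glossary.items():
        # Whole-word, case-insensitive match; re.escape handles characters like "-".
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE):
            entities.append({"term": term, "label": label})
    return {"text": text, "metadata": {"entities": entities}}


doc = annotate("The rain jacket uses a Gore-Tex membrane.")
print(doc["metadata"])
```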

3. Select the Right Platform

Choose a platform based on your goals and data type. Common architectures include:

Standard RAG with Vector Databases:

- Ideal for text-based data, allowing semantic search using databases like Pinecone, Weaviate, or Elasticsearch.

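- Key considerations:

  - Tune chunk sizes: smaller chunks match queries more precisely but multiply the number of vectors and metadata records to manage, while larger chunks carry more context per result but dilute relevance.

  - Partition data into separate collections (for example, by source or topic) so that each query searches only relevant content.

  - Plan for scalability, especially if the index must be updated frequently.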
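The chunk-size trade-off is easier to see with a concrete splitter. The sketch below uses fixed-size windows with overlap, so content near a boundary appears in both neighbouring chunks. For simplicity it counts words. Real pipelines usually count tokens with the embedding model's tokenizer, and the default sizes here are assumptions to tune, not recommendations.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into chunks of at most `chunk_size` words, with `overlap` words
    shared between neighbouring chunks so boundary content is not lost."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Lowering `chunk_size` produces more, narrower chunks, which means more vectors and metadata to store. Raising it gives fewer, broader ones.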

Relational Databases:

- Include the database schema in your LLM prompt so the model can translate user requests into SQL queries (e.g., Text-to-SQL Patterns).
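The sketch below shows the schema-in-prompt idea with SQLite. It reads the `CREATE TABLE` statements that SQLite keeps in `sqlite_master` and embeds them in the prompt. The prompt wording and the `orders` table are assumptions, and the call to the LLM itself is left out.

```python
import sqlite3


def schema_ddl(conn: sqlite3.Connection) -> str:
    """Collect the CREATE TABLE statements that SQLite stores in sqlite_master."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] + ";" for row in rows)


def text_to_sql_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Build a prompt that gives the model the schema and asks for one SQLite SELECT."""
    return (
        "You translate questions into SQLite queries.\n"
        f"Schema:\n{schema_ddl(conn)}\n"
        "Use only the tables and columns in the schema. "
        "Return a single SELECT statement and nothing else.\n"
        f"Question: {question}\nSQL:"
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total_usd REAL)")
print(text_to_sql_prompt(conn, "What is the average order total?"))
```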

Hybrid Text Search:

- Combine keyword-based text search (e.g., Elasticsearch) with semantic search, so that both exact terms (such as product codes) and paraphrased questions can be matched.
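One common way to merge keyword and vector results is Reciprocal Rank Fusion (RRF). RRF scores each document by summing 1 / (k + rank) over every result list it appears in, so it needs only ranks and not comparable scores. The sketch below uses k = 60, a widely used default. The document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one list using RRF."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a deterministic order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["doc3", "doc1", "doc7"]   # e.g. from full-text (BM25) search
semantic_hits = ["doc1", "doc4", "doc3"]  # e.g. from a vector index
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
# ['doc1', 'doc3', 'doc4', 'doc7']
```

Documents found by both searches (`doc1`, `doc3`) rise to the top. Documents found by only one search still appear further down.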

Graph Databases:

- Create knowledge graphs to capture relationships between entities, enabling advanced retrieval capabilities.
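The sketch below illustrates graph-based retrieval using an in-memory list of (subject, relation, object) triples. It collects every fact within a few hops of an entity and turns them into sentences that can be placed in the prompt. A graph database such as Neo4j would run this traversal natively. The entities and relation names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def build_graph(triples: List[Triple]) -> Dict[str, List[Triple]]:
    """Index every triple under both of its entities so lookups work in either direction."""
    graph: Dict[str, List[Triple]] = defaultdict(list)
    for triple in triples:
        subject, _, obj = triple
        graph[subject].append(triple)
        graph[obj].append(triple)
    return graph


def neighborhood_facts(graph: Dict[str, List[Triple]], entity: str, hops: int = 2) -> List[str]:
    """Breadth-first walk collecting facts within `hops` edges of `entity`."""
    seen_entities: Set[str] = {entity}
    seen_triples: Set[Triple] = set()
    frontier = [entity]
    facts: List[str] = []
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for triple in graph.get(node, []):
                if triple in seen_triples:
                    continue
                seen_triples.add(triple)
                facts.append(" ".join(triple))  # e.g. "Acme acquired Widgets Inc"
                for neighbor in (triple[0], triple[2]):
                    if neighbor not in seen_entities:
                        seen_entities.add(neighbor)
                        next_frontier.append(neighbor)
        frontier = next_frontier
    return facts


graph = build_graph([
    ("Acme", "acquired", "Widgets Inc"),
    ("Widgets Inc", "makes", "sensors"),
    ("sensors", "used_in", "drones"),
])
print(neighborhood_facts(graph, "Acme", hops=2))
```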

4. Fine-Tune the Model

While RAG supplies external knowledge at query time without altering the base model, fine-tuning may be essential in specific scenarios, such as:

- Teaching the model industry-specific terminology.

- Ensuring consistent formatting for legal or technical documents.

You can fine-tune open-source models directly or use OpenAI’s fine-tuning API to train a custom model. OpenAI also offers custom GPTs that can be tailored to a specific domain, which can save development time.
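Most fine-tuning work starts with preparing training examples. The sketch below serializes question/ideal-answer pairs as JSONL in the chat `messages` layout that OpenAI documents for fine-tuning chat models. Check the provider's current documentation for your target model before uploading, since requirements change. The example pairs and system prompt are hypothetical.

```python
import json
from typing import Iterable, Tuple


def to_chat_jsonl(examples: Iterable[Tuple[str, str]], system_prompt: str) -> str:
    """Serialize (question, ideal_answer) pairs as chat-format JSONL, one example per line."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},  # the response the model should learn
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


# Hypothetical examples teaching house terminology and a fixed answer format.
examples = [
    ("What does 'MSA' mean in our contracts?",
     "Definition: Master Services Agreement, the framework contract governing all statements of work."),
    ("What does 'SOW' mean in our contracts?",
     "Definition: Statement of Work, the project-specific document issued under the MSA."),
]
print(to_chat_jsonl(examples, "You answer questions about our legal terminology."), end="")
```

Keeping every assistant answer in the same shape (here, starting with "Definition:") is what teaches the model the consistent formatting mentioned above.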

5. Master Prompt Engineering

Crafting precise prompts is critical to guide LLM responses. Prompts generally include:

- Instruction: The task or objective.

- Context: External information to steer responses.

- Input Data: The query or user input.

- Output Indicator: Desired response format.
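The four parts above can be assembled with a small helper. In a RAG chatbot the context is usually the retrieved chunks, so the sketch below numbers them, which lets the model refer to its sources. The section labels and their order are assumptions, not a required format.

```python
from typing import List, Optional


def build_prompt(instruction: str, context_chunks: List[str], input_data: str,
                 output_indicator: Optional[str] = None) -> str:
    """Assemble instruction, numbered context, input, and optional output format."""
    context = "\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1))
    sections = [
        f"Instruction:\n{instruction}",
        f"Context:\n{context}",
        f"Input:\n{input_data}",
    ]
    if output_indicator:
        sections.append(f"Output format:\n{output_indicator}")
    return "\n\n".join(sections)


print(build_prompt(
    "Answer using only the context and cite sources by number.",
    ["Refunds are accepted within 30 days.", "Shipping is free over $50."],
    "Can I return an item after three weeks?",
    "One sentence followed by the cited source number.",
))
```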

Example for Elasticsearch:

```text
Your task is to create a valid Elasticsearch DSL query.
Given the mapping: ```{mapping}```, translate the query: ```{query}``` into JSON.
- Use only fields from the mapping.
- Ensure case-insensitivity and support fuzzy matches.
- Compress the JSON output, removing spaces.
```
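Because a model can ignore instructions, it helps to check its reply before running it. The sketch below fills the Elasticsearch prompt using Python's `str.format` placeholders. It then parses the reply and rejects queries that reference fields missing from the mapping. The mapping, the user query, and the model reply are hypothetical, and the field check covers only common leaf queries.

```python
import json
from typing import Any, Dict, Set

# The Elasticsearch prompt as a template; {mapping} and {query} are str.format placeholders.
PROMPT_TEMPLATE = (
    "Your task is to create a valid Elasticsearch DSL query.\n"
    "Given the mapping: ```{mapping}```, translate the query: ```{query}``` into JSON.\n"
    "- Use only fields from the mapping.\n"
    "- Ensure case-insensitivity and support fuzzy matches.\n"
    "- Compress the JSON output, removing spaces.\n"
)

# Leaf queries whose object keys are field names, e.g. {"match": {"title": ...}}.
# Simplified: multi_match, exists, nested paths and sub-fields are not handled.
FIELD_KEYED_QUERIES = {"match", "match_phrase", "term", "fuzzy", "wildcard", "prefix", "regexp", "range"}


def fields_used(node: Any) -> Set[str]:
    """Recursively collect field names referenced by the leaf queries listed above."""
    found: Set[str] = set()
    if isinstance(node, dict):
        for key, value in node.items():
            if key in FIELD_KEYED_QUERIES and isinstance(value, dict):
                found.update(value.keys())
            else:
                found |= fields_used(value)
    elif isinstance(node, list):
        for item in node:
            found |= fields_used(item)
    return found


def validate_generated_query(raw: str, mapping: Dict[str, Any]) -> Dict[str, Any]:
    """Parse the model's reply and reject queries that reference unmapped fields."""
    query = json.loads(raw)  # raises json.JSONDecodeError if the reply is not valid JSON
    unknown = fields_used(query) - set(mapping.get("properties", {}))
    if unknown:
        raise ValueError(f"query uses fields not in the mapping: {sorted(unknown)}")
    return query


mapping = {"properties": {"title": {"type": "text"}, "author": {"type": "keyword"}}}
prompt = PROMPT_TEMPLATE.format(
    mapping=json.dumps(mapping, separators=(",", ":")),
    query="books with hobbit in the title",
)
# Hypothetical model reply; sending `prompt` to an LLM is out of scope here.
raw = '{"query":{"match":{"title":{"query":"hobbit","fuzziness":"AUTO"}}}}'
print(validate_generated_query(raw, mapping))
```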

For advanced prompt strategies, see Modern Advances in Prompt Engineering.

6. Iterative Testing

Testing machine learning models is inherently complex. Key strategies:

- MVP Feedback Loop: Launch a minimum viable product (MVP) to gather user feedback and identify issues early.

- Testing Frameworks: Use tools like deepeval to create unit tests for RAG and LLM applications.

- Performance Tracking: Maintain test metrics alongside dataset versions to monitor improvements.
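Frameworks such as deepeval add LLM-judged metrics. The retrieval side, however, can be unit-tested with plain Python, as the sketch below shows. It does not use deepeval's API. It scores hit rate@k on a small labelled question set and records the result next to a dataset version, so runs can be compared over time. The evaluation set, `fake_retrieve`, the version string, and the 0.5 threshold are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical labelled evaluation set: question -> IDs of documents that answer it.
EVAL_SET: Dict[str, Sequence[str]] = {
    "How do I reset my password?": ["kb-12"],
    "What is the refund window?": ["kb-40", "kb-41"],
}


def hit_rate_at_k(retrieve: Callable[[str, int], List[str]],
                  eval_set: Dict[str, Sequence[str]], k: int = 5) -> float:
    """Fraction of questions with at least one relevant document in the top k results."""
    hits = sum(1 for question, relevant in eval_set.items()
               if set(retrieve(question, k)) & set(relevant))
    return hits / len(eval_set)


def evaluate(retrieve: Callable[[str, int], List[str]], dataset_version: str, k: int = 5) -> Dict[str, object]:
    """Record the metric alongside the dataset version it was measured on."""
    return {"dataset_version": dataset_version, f"hit_rate@{k}": hit_rate_at_k(retrieve, EVAL_SET, k)}


def fake_retrieve(question: str, k: int) -> List[str]:
    """Stand-in retriever for the example; a real test would query the vector index."""
    canned = {"How do I reset my password?": ["kb-12", "kb-3"], "What is the refund window?": ["kb-7"]}
    return canned.get(question, [])[:k]


def test_retrieval_hit_rate() -> None:
    """pytest-style regression check; fails if retrieval quality drops below the threshold."""
    result = evaluate(fake_retrieve, dataset_version="2024-06-01")
    assert result["hit_rate@5"] >= 0.5, f"retrieval regressed: {result}"


print(evaluate(fake_retrieve, dataset_version="2024-06-01"))
```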

7. Optimize the Front-End

Collaborate with UI/UX teams to integrate LLM capabilities seamlessly. Consider:

- Response Time Management: Display progress indicators during longer LLM response times.

- User Query Refinement: Allow users to confirm intent before running complex queries to reduce errors.

- POC Front-End Tools: Use frameworks like Chainlit for quick prototypes or Streamlit for customizable interfaces.
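Response-time management often comes down to streaming. The sketch below shows a progress indicator until the first chunk of the reply arrives, then displays chunks as they are generated rather than after the whole answer is ready. It is a terminal-only illustration. `fake_llm_stream` is a hypothetical stand-in for a streaming LLM API, and in a Chainlit or Streamlit app the `write` callback would be replaced by that framework's own display components.

```python
import sys
import time
from typing import Callable, Iterable, Iterator, List, Optional

PLACEHOLDER = "Thinking..."
CLEAR = "\r" + " " * len(PLACEHOLDER) + "\r"  # blanks out the placeholder on a terminal line


def fake_llm_stream(answer: str, delay: float = 0.0) -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM API: yields the answer one word at a time."""
    for word in answer.split():
        time.sleep(delay)  # simulate generation latency
        yield word + " "


def render_with_progress(stream: Iterable[str],
                         write: Optional[Callable[[str], object]] = None) -> str:
    """Show a placeholder until the first chunk arrives, then append chunks as they stream in."""
    write = write or sys.stdout.write
    write(PLACEHOLDER)
    parts: List[str] = []
    for chunk in stream:
        if not parts:
            write(CLEAR)  # first chunk: replace the progress indicator with real output
        write(chunk)
        parts.append(chunk)
    if not parts:
        write(CLEAR)  # nothing arrived; still remove the indicator
    write("\n")
    return "".join(parts)


reply = render_with_progress(fake_llm_stream("Your order ships in two days.", delay=0.05))
```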

8. Avoid Common Pitfalls

- Data Quality Issues:

  - Regularly update datasets.

  - Rerun integration tests after updates to assess performance changes.

- Neglecting Security:

  - Address risks such as query (prompt) injection early. For example, restrict prompts so that malicious commands embedded in user input cannot alter the model’s outputs.

- Lack of User Feedback:

  - Early user testing is invaluable. Users often interact with applications in unpredictable ways, revealing critical usability issues.

- Scalability Challenges:

  - Default to smaller, faster models such as GPT-3.5, and reserve GPT-4 for queries whose complexity demands it.
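Restricting prompts can be complemented by a guard at the execution layer. For the Text-to-SQL pattern, even a successfully injected prompt should not be able to modify data. The sketch below does this with SQLite by installing an authorizer callback via `sqlite3.Connection.set_authorizer`. The callback allows only the actions a plain `SELECT` needs and denies everything else. It assumes a connection dedicated to model-generated SQL; with other databases, a read-only database role serves a similar purpose. The `orders` table is hypothetical.

```python
import sqlite3

# Actions a read-only query needs: the SELECT itself, column reads, and function calls
# such as COUNT() or AVG(). Everything else (INSERT, DROP, ATTACH, PRAGMA, ...) is denied.
# Note: recursive CTEs (SQLITE_RECURSIVE) are also denied by this allow-list.
READ_ONLY_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def read_only_authorizer(action, arg1, arg2, db_name, trigger_or_view):
    """sqlite3 authorizer callback: allow only the actions a plain SELECT needs."""
    return sqlite3.SQLITE_OK if action in READ_ONLY_ACTIONS else sqlite3.SQLITE_DENY


def lock_down(conn: sqlite3.Connection) -> sqlite3.Connection:
    """Install the authorizer; use this connection only for model-generated SQL."""
    conn.set_authorizer(read_only_authorizer)
    return conn


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total_usd REAL)")
conn.executemany("INSERT INTO orders (total_usd) VALUES (?)", [(10.0,), (30.0,)])
conn.commit()  # finish setup before locking the connection down
lock_down(conn)

print(conn.execute("SELECT COUNT(*), AVG(total_usd) FROM orders").fetchall())
try:
    conn.execute("DROP TABLE orders")  # e.g. SQL produced from an injected instruction
except sqlite3.DatabaseError as exc:
    print("blocked:", exc)
```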

Looking Ahead

The rise of LLMs has transformed how we think about search and chatbot applications. While we’re in the early stages, the possibilities are immense. Businesses that adapt and strategically integrate these technologies will unlock innovative opportunities and thrive in the evolving landscape.