Throughout history, disruptive technologies have been the catalyst for major social and economic revolutions. The domestication of plants and animals roughly 12,000 years ago sparked the Agricultural Revolution, later amplified by inventions such as irrigation systems and the plow, while Johannes Gutenberg’s 15th-century printing press fueled the Protestant Reformation and helped spread the learning of the Renaissance across Europe. In the 18th century, James Watt’s improved steam engine helped usher in the Industrial Revolution. More recently, the internet has revolutionized communication, commerce, and information access, shrinking the world into a global village, and smartphones have transformed how people interact with their surroundings.

Now, we stand at the dawn of the AI revolution. Large Language Models (LLMs) represent a monumental leap forward, with significant economic implications at both the macro and micro levels. These models are reshaping global markets, introducing a new unit of exchange (the token), and creating a novel economic landscape.

The reason LLMs are transforming industries and redefining economies is simple: they automate both routine and complex tasks that have traditionally required human intelligence. They support better decision-making, boost productivity, and cut costs across many sectors, freeing organizations to direct people toward more creative and strategic work and, in turn, to develop new products and services. From healthcare to finance to customer service, LLMs are creating new markets and bringing AI services such as content generation and conversational assistants into the mainstream.

To truly grasp the engine driving this new global economy, it’s essential to understand the inner workings of this disruptive technology. These posts will provide both a macro-level overview of the economic forces at play and a deep dive into the technical mechanics of LLMs, equipping you with a comprehensive understanding of the revolution happening now.

Why Now? The Connection Between Language and Human Intelligence

AI did not begin with ChatGPT’s arrival in November 2022. Practitioners were building machine learning classification models long before then, and the roots of AI go back much further. In 1950, Alan Turing, considered the father of theoretical computer science and famed for his role in cracking the Nazi Enigma code during World War II, proposed one of the first formal tests of machine intelligence. Known as the Turing Test, it framed intelligence in terms of natural language conversation: a human evaluator converses with both a human and a machine, and if the evaluator cannot reliably distinguish between the two, the machine is considered to have passed. (The field itself was named a few years later, when the term “artificial intelligence” was coined for the 1956 Dartmouth workshop.) Remarkably, 72 years after Turing’s proposal, ChatGPT conducted exactly this kind of conversation so convincingly that many observers considered the test effectively passed, igniting the current AI explosion.

But why is language so closely tied to human intelligence, rather than, for example, vision? Although vision occupies a larger share of the brain’s cortex than any other sense, OpenAI’s pioneering image generation model, DALL-E, released nearly two years before ChatGPT, did not trigger the same level of excitement. The answer lies in the profound role language has played in human evolution.

The Evolution of Language

The development of language was the turning point in humanity’s rise to dominance on Earth. As Yuval Noah Harari argues in his book Sapiens: A Brief History of Humankind, it was the ability to gossip and to discuss abstract concepts that set humans apart from other species, allowing them to cooperate in large, flexible groups. Such complex communication requires a shared, sophisticated language.

Written language evolved from primitive cave markings to structured alphabets which, combined with rules of grammar, produced languages capable of expressing a virtually unlimited range of ideas. In today’s digital age, language has evolved further with the inclusion of emojis, and with the advent of GenAI, tokens have become the latest cornerstone in this progression. These shifts highlight the extraordinary journey of human language, from simple symbols to intricate digital representations.

In the next post, we will explore the intricacies of LLMs, focusing specifically on tokens. But before that, let’s delve into the economic forces shaping the LLM-driven world.

The Forces Shaping the LLM Economy

AI Giants in Competition

Karl Marx and Friedrich Engels argued that those who control the means of production hold power. The tech giants of today understand that AI is the future means of production, and the race to dominate the LLM market is well underway.

This competition is fierce, with industry leaders like OpenAI, Google, Microsoft, and Meta (Facebook) battling for supremacy. Newer challengers such as Mistral (France), AI21 (Israel), Anthropic, and Elon Musk’s xAI are also entering the fray. The LLM industry is expanding rapidly, with billions of dollars of investment pouring in. For example, Anthropic has raised $4.5 billion from 43 investors, including major players like Amazon, Google, and Microsoft.

The Scarcity of GPUs

Just as Bitcoin mining requires vast computational resources, training LLMs demands immense computing power, and that computing power consumes so much electricity that AI companies are searching for new energy sources. Microsoft’s recent deal to secure nuclear power for its data centers underscores this urgency.

At the heart of LLM technology are Graphics Processing Units (GPUs), whose massively parallel design makes them well suited to the matrix arithmetic that trains and runs deep neural networks. These GPUs have become scarce and expensive, adding to the competitive tension.

- Manufacturing Constraints: Limited production capacity, semiconductor shortages, and the slowing pace of Moore’s Law contribute to this scarcity.

- Strategic Reserves: Larger companies secure vast quantities of GPUs to ensure uninterrupted AI operations, leaving smaller firms struggling to access this crucial resource.

- Alternative Solutions: Companies are developing alternatives, such as Google’s Tensor Processing Units (TPUs) and new AI chips from startups like Groq and SambaNova, to reduce reliance on traditional GPUs. Meanwhile, inference techniques such as speculative decoding and new model architectures such as structured state space models (SSMs) seek to make better use of existing hardware.

Tokens: The New Currency of the LLM Economy

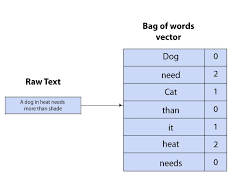

Tokens are the currency driving the emerging AI economy. Just as money facilitates transactions in traditional markets, tokens are the foundation of LLM economics. But what exactly are tokens?

Tokens are the basic units of text that LLMs process. They can be single characters, parts of words, or entire words. For example, the word “Oscar” might be split into two tokens, “os” and “car.” An LLM’s practical performance, in terms of quality, speed, and cost, is tied to tokens: how text is split into them, how many must be processed, and how quickly the model can generate them.
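To make the idea of subword tokens concrete, here is a toy sketch in Python. It assumes a tiny, hand-written vocabulary (`TOY_VOCAB`, hypothetical) and a simple greedy longest-match rule; production tokenizers instead learn vocabularies of tens of thousands of entries or more from data, typically with byte-pair encoding (BPE), so their actual splits will differ.

```python
# Hypothetical toy vocabulary; real LLM vocabularies are learned from data
# (e.g. with byte-pair encoding) and are far larger.
TOY_VOCAB = {"os", "car", "the", "o", "s", "c", "a", "r", " "}


def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text into the longest vocabulary entries, scanning left to right."""
    text = text.lower()  # toy simplification: real tokenizers are case-sensitive
    max_len = max(len(entry) for entry in vocab)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocabulary match is found.
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            # No match at all: emit the unknown character as its own token
            # (real tokenizers fall back to raw bytes instead).
            tokens.append(text[i])
            i += 1
    return tokens


print(greedy_tokenize("Oscar", TOY_VOCAB))  # ['os', 'car']
```

Greedy longest-match is chosen here only because it is easy to follow; it is not the algorithm any particular LLM uses.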

LLM providers price their services based on token usage, with different rates for input (prompt) and output (completion) tokens; output tokens are typically the more expensive of the two. As companies rely more on LLMs, especially for complex tasks like agentic applications that chain many model calls together, token usage will significantly affect operational costs.
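Because input and output tokens are billed separately, the cost of a request is simple arithmetic. The sketch below assumes hypothetical per-million-token prices (not any provider’s actual rates) and a hypothetical agent that re-sends its whole, growing context on each of 20 steps, to show why agentic workloads multiply token spend.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Per-million-token prices in USD (values used below are hypothetical)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: TokenPricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: prompt and completion tokens are billed at separate rates."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


# Hypothetical prices, for illustration only; output tokens cost more than input.
pricing = TokenPricing(input_per_million=2.50, output_per_million=10.00)

# One chat turn: 1,200 prompt tokens and 400 completion tokens.
single = request_cost(pricing, input_tokens=1_200, output_tokens=400)

# Hypothetical 20-step agent: each step re-sends the accumulated context
# (1,200 more tokens per step) and generates another 400 tokens.
agentic = sum(
    request_cost(pricing, input_tokens=1_200 * step, output_tokens=400)
    for step in range(1, 21)
)

print(f"single call: ${single:.4f}")
print(f"20-step agent: ${agentic:.4f}")
```

With these assumed prices, the single call costs $0.007 while the 20-step agent costs about $0.71, roughly 100 times more, mostly because the re-sent context grows at every step.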

With fierce competition and the rise of open-weight models like Llama 3.1, the cost of tokens is falling rapidly. For instance, OpenAI cut the price of its GPT-4-class models by about 80% over the past year and a half. This trend enables companies to expand their portfolio of AI-powered products, further fueling the LLM economy.

Context Windows: Expanding Capabilities

Recently, a new metric, context window size, has emerged as a key factor in LLM performance. The context window is the maximum number of tokens a model can take into account at once, covering the input prompt and, in most models, the generated output as well; a larger window allows more tokens to be processed in a single request. This offers several advantages:

- Bragging Rights: A larger context window showcases the strength of a model.

- New Functionalities: Larger windows enable new use cases, such as Q&A applications without retrieval-augmented generation (RAG).

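- Technological Demand: Emerging architectures like Mamba, a state space model whose computation grows linearly with sequence length rather than quadratically as in transformer self-attention, are addressing the limitations of transformer-based models and making larger context windows more practical.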

- Increased Revenue: Larger context windows allow users to input more tokens, driving up revenue for LLM providers.
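The trade-off behind the “Q&A without RAG” and “Increased Revenue” points can be sketched with a little arithmetic. This example assumes, as is common, that the prompt and the generated output share one context window; the document size, chunk sizes, window sizes, and price are all hypothetical.

```python
# All numbers are hypothetical, chosen only to illustrate the trade-off.
INPUT_PRICE_PER_MILLION = 2.50   # USD per million input tokens
DOCUMENT_TOKENS = 150_000        # a long document pasted in whole
QUESTION_TOKENS = 50
RETRIEVED_TOKENS = 3 * 500       # RAG: top-3 retrieved chunks of 500 tokens each
MAX_OUTPUT_TOKENS = 1_000


def fits_in_context(prompt_tokens: int, max_output_tokens: int, context_window: int) -> bool:
    """Assumes the prompt and the generated output share the same context window."""
    return prompt_tokens + max_output_tokens <= context_window


def input_cost(prompt_tokens: int) -> float:
    """Input-side cost of one request, in USD."""
    return prompt_tokens * INPUT_PRICE_PER_MILLION / 1_000_000


whole_doc_prompt = DOCUMENT_TOKENS + QUESTION_TOKENS  # Q&A without RAG
rag_prompt = RETRIEVED_TOKENS + QUESTION_TOKENS        # Q&A with RAG

for window in (8_000, 128_000, 200_000):
    ok = fits_in_context(whole_doc_prompt, MAX_OUTPUT_TOKENS, window)
    print(f"{window:>7,}-token window, whole document fits: {ok}")

print(f"whole-document prompt: ${input_cost(whole_doc_prompt):.4f}")
print(f"RAG prompt:            ${input_cost(rag_prompt):.4f}")
```

Under these assumptions, only the 200,000-token window can hold the whole document plus a 1,000-token answer, and each whole-document question costs nearly 100 times more in input tokens than the retrieval-based prompt, which illustrates why larger windows both enable RAG-free Q&A and raise provider revenue.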

Summary

The disruptive power of LLMs has ignited a new social revolution, reshaping how humans interact with AI. As LLMs continue to evolve, a dynamic economy is emerging around them, fostering the development of new markets and capabilities that were previously unimaginable.
