Salesforce has introduced advanced capabilities for unstructured data in Data Cloud and Einstein Copilot Search. By combining semantic search with prompts in Einstein Copilot, Large Language Models (LLMs) can generate more accurate, up-to-date, and transparent responses, while the Einstein Trust Layer keeps company data secure. Retrieval Augmented Generation in Artificial Intelligence has taken Salesforce’s Einstein and Data Cloud to new heights.

These features are supported by the AI framework called Retrieval Augmented Generation (RAG), allowing companies to enhance trust and relevance in generative AI using both structured and unstructured proprietary data.

RAG Defined: RAG assists companies in retrieving and utilizing their data, regardless of its location, to achieve superior AI outcomes. The RAG pattern coordinates queries and responses between a search engine and an LLM, specifically working on unstructured data such as emails, call transcripts, and knowledge articles.

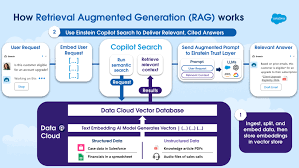

How RAG Works:

- Retrieval: RAG employs semantic search, such as Salesforce’s Einstein Copilot Search, to retrieve the most relevant data for constructing prompts.

- Augmented: LLMs don’t require upfront training on this data. Instead, relevant contextual data is automatically added to the prompt, enhancing its effectiveness.

- Generation: The augmented prompt is sent to the LLM, which generates a response using the most appropriate and recent data. The RAG pattern ensures users can access the AI model’s sources to verify accuracy.
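The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not Salesforce's implementation: the `Document` type, the `retrieve`, `augment`, and `generate` functions, and the sample documents are all hypothetical, word overlap stands in for real semantic search, and `generate` is a placeholder where an actual LLM call would go.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical identifier, e.g. a knowledge article ID
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Retrieval: rank documents by word overlap with the query.

    Assumption: word overlap is only a toy stand-in for semantic search.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in docs]
    scored = [(s, d) for s, d in scored if s > 0]  # drop irrelevant documents
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def augment(query: str, context: list[Document]) -> str:
    """Augmentation: add the retrieved text, with its sources, to the prompt."""
    lines = [f"[{i}] ({d.source}) {d.text}" for i, d in enumerate(context, 1)]
    return (
        "Answer using only the context below and cite sources by number.\n\n"
        "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"
    )


def generate(prompt: str) -> str:
    """Generation: placeholder for a real LLM call (no model is invoked here)."""
    return f"(model response to a {len(prompt)}-character prompt)"


DOCS = [
    Document("kb-001", "Refunds are issued within 14 days of a return request."),
    Document("kb-002", "Password resets require a verified email address."),
    Document("call-017", "Customer asked about shipping times to Canada."),
]

question = "How long do refunds take?"
prompt = augment(question, retrieve(question, DOCS))
print(prompt)
print(generate(prompt))
```

Because each retrieved passage carries its source identifier into the prompt, the model can be asked to cite it, which is what lets users trace an answer back to the underlying data.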

Salesforce’s Implementation of RAG: RAG begins with Salesforce Data Cloud, which has been expanded to support storage of unstructured data such as PDFs and emails. A new unstructured data pipeline enables teams to select and utilize unstructured data across the Einstein 1 Platform. The Data Cloud Vector Database combines structured and unstructured data, facilitating efficient processing.

RAG in Action with Einstein Copilot Search:

- User requests are transformed into vector embeddings.

- Einstein Copilot Search runs a semantic search operation, comparing the request’s numeric representation against the embeddings of the unstructured data.

- Semantic and keyword search are combined for precise results.

- The augmented prompt includes the original user request and relevant contextual data.

- The prompt is passed through the Einstein Trust Layer before it reaches the LLM, which helps the LLM generate trusted, relevant answers.

- Citations in the output highlight the data sources, promoting transparency.
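To make the combination of semantic and keyword search more concrete, here is a small sketch of hybrid ranking. It is an illustration under stated assumptions rather than how Einstein Copilot Search works internally: `embed` uses character-trigram counts as a toy substitute for a learned embedding model, and the `alpha` weight, the function names, and the sample chunks are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: character-trigram counts.

    Assumption: a real system would use a learned embedding model instead.
    """
    s = re.sub(r"[^a-z0-9 ]", "", text.lower())
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query words that appear verbatim in the text."""
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    t = set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(q & t) / len(q) if q else 0.0


def hybrid_search(query: str, chunks: dict[str, str],
                  alpha: float = 0.5, k: int = 2) -> list[tuple[str, float]]:
    """Blend vector similarity and keyword match, returning (source, score)."""
    qv = embed(query)
    scored = []
    for source, text in chunks.items():
        score = (alpha * cosine(qv, embed(text))
                 + (1 - alpha) * keyword_score(query, text))
        scored.append((score, source))
    scored.sort(reverse=True)  # highest blended score first
    return [(source, round(score, 3)) for score, source in scored[:k]]


CHUNKS = {
    "email-42": "The customer requested a refund for order 1182.",
    "kb-007": "Shipping to Canada takes five business days.",
    "call-003": "Agent explained how to reset a password.",
}

for source, score in hybrid_search("refund status for order 1182", CHUNKS):
    print(source, score)  # the source ID doubles as a citation
```

Returning the source identifier alongside each score is what allows the final answer to cite where its context came from.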

RAG for Enterprise Use: RAG aids in processing internal documents securely. Its four-step process involves ingestion, natural language query, augmentation, and response generation. Because answers are grounded in retrieved data, RAG reduces arbitrary answers, known as “hallucinations,” and helps ensure relevant, accurate responses.

Applications of RAG:

- Conversations with data repositories for new experiences.

- Medical assistants linked to a medical index.

- Financial analysts benefiting from market data.

- Knowledge bases enhancing LLMs for support, training, and productivity.

RAG offers a pragmatic and effective approach to using LLMs in the enterprise, combining internal or external knowledge bases to create a range of assistants that enhance employee and customer interactions.

Retrieval-augmented generation (RAG) is an AI technique for improving the accuracy of LLM-generated responses by including trusted sources of knowledge from outside the model’s original training set. Implementing RAG in an LLM-based question answering system has three benefits: 1) it gives the LLM access to the most current, reliable facts, 2) it reduces hallucination rates, and 3) it provides source attribution, which increases user trust in the output.


Content updated July 2024.