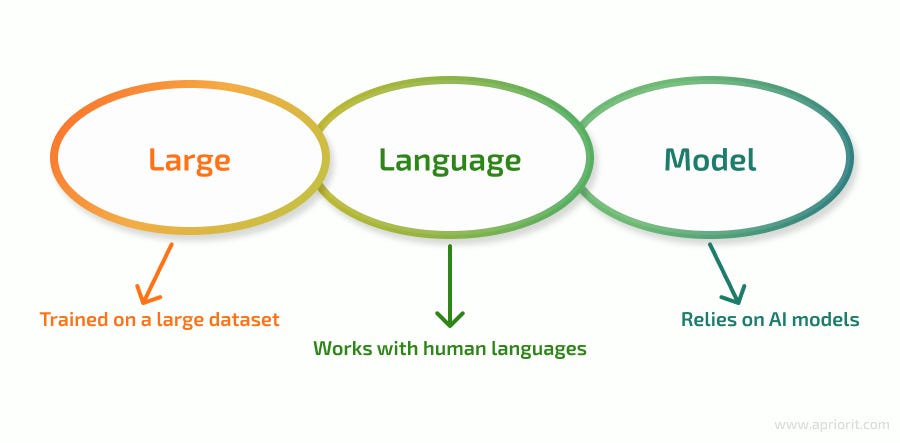

In 2023, Algo Communications, a Canadian company, faced a significant challenge. With rapid growth on the horizon, the company struggled to train customer service representatives (CSRs) quickly enough to keep pace. To address this, Algo turned to generative AI.

Algo adopted a large language model (LLM) to accelerate the onboarding of new CSRs. However, to ensure CSRs could accurately and fluently respond to complex customer queries, Algo needed more than a generic, off-the-shelf LLM. These models, typically trained on public internet data, lack the specific business context required for accurate answers. This led Algo to use retrieval-augmented generation, or RAG.

Many people have already used generative AI models like OpenAI’s ChatGPT or Google’s Gemini (formerly Bard) for tasks like writing emails or crafting social media posts. However, achieving the best results can be challenging without mastering the art of crafting precise prompts.

An AI model is only as effective as the data it’s trained on. For optimal performance, it needs accurate, contextual information rather than generic data. Off-the-shelf LLMs often lack up-to-date, reliable access to your specific data and customer relationships. RAG addresses this by retrieving the most current and relevant proprietary data and inserting it directly into LLM prompts.
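To make the idea of placing proprietary data into a prompt concrete, here is a minimal Python sketch. It is an illustration only, not Algo's or Salesforce's implementation: the function name `build_augmented_prompt`, the prompt wording, and the sample passages are all hypothetical.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Place retrieved passages ahead of the question so the model can answer from them."""
    # Number each passage so an answer could point back to its source.
    context = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the customer question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages; in a real system a retrieval step would supply them.
passages = [
    "Firmware updates are installed from the device's web interface.",
    "A device must be restarted after a firmware update completes.",
]
prompt = build_augmented_prompt("How do I update the firmware?", passages)
print(prompt)
```

The resulting string is what would be sent to the LLM, so the model sees the company's own content alongside the customer's question.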

RAG isn’t limited to structured data like spreadsheets or relational databases. It can retrieve all types of data, including unstructured data such as emails, PDFs, chat logs, and social media posts, enhancing the AI’s output quality.

How RAG Works

RAG enables companies to retrieve and use data from various internal sources for improved AI results. By grounding the model in your own trusted data, RAG reduces hallucinations and incorrect outputs, making responses more relevant and accurate. This process relies on a specialized database called a vector database, which stores data in a numerical format suitable for AI and retrieves the most relevant entries when prompted.

“RAG can’t do its job without the vector database doing its job,” said Ryan Schellack, Director of AI Product Marketing at Salesforce. “The two go hand in hand. Supporting retrieval-augmented generation means supporting a vector store and a machine-learning search mechanism designed for that data.”
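The retrieval step can be sketched in a few lines of Python. This is a toy under stated assumptions, not a production vector database: the `embed` function here simply counts words, whereas real systems use a learned embedding model, and the class `ToyVectorStore` and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts stand in for a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, vector) pairs and ranks by similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
store.add("Resetting the device password requires holding the reset button for ten seconds.")
store.add("Invoices are emailed on the first business day of each month.")
store.add("The warranty covers hardware defects for two years.")

results = store.search("How do I reset my password?", k=1)
print(results)
```

The passages returned by `search` are what a RAG system would then add to the prompt before calling the LLM.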

RAG, combined with a vector database, significantly enhances LLM outputs. However, users still need to understand the basics of crafting clear prompts.

Faster Responses to Complex Questions

In December 2023, Algo Communications began testing RAG with a few CSRs using a small sample of about 10% of its product base. They incorporated vast amounts of unstructured data, including chat logs and two years of email history, into their vector database. After about two months, CSRs became comfortable with the tool, leading to a wider rollout.

In just two months, Algo’s customer service team improved case resolution times by 67%, allowing them to handle new inquiries more efficiently.

“Exploring RAG helped us understand we could integrate much more data,” said Ryan Zoehner, Vice President of Commercial Operations at Algo Communications. “It enabled us to provide detailed, technically savvy responses, enhancing customer confidence.”

RAG now touches 60% of Algo’s products and continues to expand. The company is continually adding new chat logs and conversations to the database, further enriching the AI’s contextual understanding. This approach has halved onboarding time, supporting Algo’s rapid growth.

“RAG is making us more efficient,” Zoehner said. “It enhances job satisfaction and speeds up onboarding. Unlike other LLM efforts, RAG lets us maintain our brand identity and company ethos.”

RAG has also allowed Algo’s CSRs to focus more on personalizing customer interactions.

“It allows our team to ensure responses resonate well,” Zoehner said. “This human touch aligns with our brand and ensures quality across all interactions.”

Write Better Prompts

If you want to learn how to craft effective generative AI prompts or use Salesforce’s Prompt Builder, check out Trailhead, Salesforce’s free online learning platform.

Trail: Get Started with Prompts and Prompt Builder