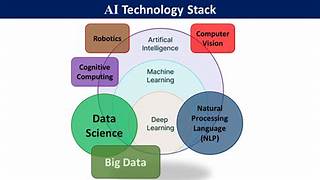

The AI tech stack integrates various tools, libraries, and solutions to enable applications with generative AI capabilities, such as image and text generation. Key components of the AI stack include programming languages, model providers, large language model (LLM) frameworks, vector databases, operational databases, monitoring and evaluation tools, and deployment solutions.

The infrastructure of the AI tech stack leverages both parametric knowledge from foundational models and non-parametric knowledge from information sources (PDFs, databases, search engines) to perform generative AI functions like text and image generation, text summarization, and LLM-powered chat interfaces.

This insight explores each component of the AI tech stack, aiming to provide a comprehensive understanding of the current state of generative AI applications, including the tools and technologies used to develop them.

For those with a technical background, this Tectonic insight includes familiar tools and introduces new players within the AI ecosystem. For those without a technical background, the article serves as a guide to understanding the AI stack, emphasizing its importance across business roles and departments.

Content Overview:

- Brief Overview of the Standard Technology Stack: A look into the layers of traditional technology stacks.

- Discussion of the AI Layer: An introduction to the AI layer within the tech stack.

- Introduction to the AI Stack and Its Components: A detailed look at the various components that make up the AI stack.

- Detailed Definitions of Each Component of the AI Stack: In-depth explanations of the stack’s elements.

- The Shift from AI Research to Scalable Real-World Applications: How AI is transitioning from research to practical, scalable applications.

- Open- vs. Closed-Source Models: An analysis of the impact of choosing between open- and closed-source models on project direction, resource allocation, and ethical considerations.

The AI layer is a vital part of the modern application tech stack, integrating across various layers to enhance software applications. This layer brings intelligence to the stack through capabilities such as data visualization, generative AI (image and text generation), predictive analysis (behavior and trends), and process automation and optimization.

In the backend layer, responsible for orchestrating user requests, the AI layer introduces techniques like semantic routing, which allocates user requests based on the meaning and intent of the tasks, leveraging LLMs.
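To make semantic routing concrete, here is a minimal sketch of the idea. It assumes a toy bag-of-words vector as a stand-in for a real embedding model or LLM, and the route names and keyword descriptions are hypothetical; a production router would compare learned embeddings or ask an LLM to classify intent.

```python
import math
from collections import Counter

# Hypothetical routes: each backend handler is described by a few keywords.
ROUTES = {
    "billing": "invoice payment refund charge subscription price",
    "support": "error bug crash broken login password reset",
    "sales": "demo pricing plan upgrade purchase quote",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route(request: str, default: str = "support") -> str:
    """Send the request to the route whose description is most similar."""
    query = embed(request)
    scores = {name: cosine(query, embed(desc)) for name, desc in ROUTES.items()}
    best = max(scores, key=scores.get)
    # Fall back to the default route when nothing matches at all.
    return best if scores[best] > 0 else default


print(route("I need a refund for my last invoice"))
```

The routing decision depends only on similarity scores, so swapping the toy `embed` function for a real embedding model would leave the rest of the logic unchanged.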

As the AI layer becomes more effective, it also reduces the roles and responsibilities of the application and data layers, potentially blurring the boundaries between them. Nevertheless, the AI stack is becoming a cornerstone of software development.

Components of the AI Stack in the Generative AI Landscape:

- Programming Language: The language used to develop the components of the stack.

- Model Provider: Organizations offering access to foundational models, often more critical than the specific models themselves due to their reputation and reliability.

- LLM Orchestrator and Framework: Libraries that simplify the integration of AI application components, including tools for prompt creation and LLM conditioning.

- Vector Database: Solutions for storing and managing vector embeddings, crucial for efficient searches.

- Operational Database: Data storage solutions for transactional and operational data.

- Monitoring and Evaluation Tools: Tools that track AI model performance and reliability, providing analytics and alerts.

- Deployment Solution: Services that facilitate the deployment of AI models, including scaling and integration with existing infrastructure.

The stack emphasizes model providers over specific models because a provider's ability to update models, offer support, and engage with the community tends to outlast any single model. Within one provider's API, switching models is often as simple as changing a model name in the code.
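The sketch below illustrates what changing a model name can look like in practice. It builds a request body without calling any API; `ModelConfig` and `build_chat_payload` are hypothetical helpers, and the payload shape is only loosely modeled on common chat-completion APIs rather than taken from a specific provider's specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    provider: str             # illustrative label only
    model: str                # the one field that changes when swapping models
    temperature: float = 0.0


def build_chat_payload(config: ModelConfig, user_message: str) -> dict:
    """Build a request body for a chat model; no network call is made."""
    return {
        "model": config.model,
        "temperature": config.temperature,
        "messages": [{"role": "user", "content": user_message}],
    }


current = ModelConfig(provider="openai", model="gpt-3.5-turbo")
upgraded = ModelConfig(provider="openai", model="gpt-4")

payload_a = build_chat_payload(current, "Summarize this report.")
payload_b = build_chat_payload(upgraded, "Summarize this report.")
print(payload_a["model"], "->", payload_b["model"])
```

Keeping the model name in configuration rather than scattered through application code is what makes the swap a one-line change.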

Programming Languages

Programming languages are crucial in shaping the AI stack, influencing the selection of other components and ultimately determining the development of AI applications. The choice of a programming language is especially important for modern AI applications, where security, latency, and maintainability are critical factors.

Among the options for AI application development, Python, JavaScript, and TypeScript (a superset of JavaScript) are the primary choices. Python holds a significant share among data scientists, machine learning engineers, and AI engineers, largely due to its extensive library support, including TensorFlow, PyTorch, and Scikit-learn. Python's readable syntax and flexibility, which accommodates both simple scripts and full object-oriented applications, contribute to its popularity.

According to the February 2024 update on the PYPL (PopularitY of Programming Language) Index, Python leads with a 28.11% share, reflecting a growth trend of +0.6% over the previous year. The PYPL Index tracks the popularity of programming languages based on the frequency of tutorial searches on Google, indicating broader usage and interest.

JavaScript, with an 8.57% share in the PYPL Index, has traditionally dominated web application development and is now increasingly used in the AI domain. This is facilitated by libraries and frameworks that integrate AI functionalities into web environments, enabling web developers to leverage AI infrastructure directly. Notable AI frameworks like LlamaIndex and LangChain offer implementations in both Python and JavaScript/TypeScript.

The GitHub 2023 State of Open Source report highlights JavaScript’s dominance among developers, with Python showing a 22.5% year-over-year increase in usage on the platform. This growth reflects Python’s versatility in applications ranging from web development to data-driven systems and machine learning.

The programming language component of the AI stack is more established and predictable compared to other components, with Python and JavaScript/TypeScript solidifying their positions among software engineers, web developers, data scientists, and AI engineers.

Model Providers

Model providers are a pivotal component of the AI stack, offering access to powerful large language models (LLMs) and other AI models. These providers, ranging from small organizations to large corporations, make available various types of models, including embedding models, fine-tuned models, and foundational models, for integration into generative AI applications.

The AI landscape features a wide array of models that support capabilities like predictive analytics, image generation, and text completion. These models can be broadly classified into closed-source and open-source categories.

Closed-source models keep their internal configurations, architectures, and algorithms private rather than sharing them with model consumers. Key information about the training process and data is withheld, which limits how far users can modify the model's behavior. Access to these models is typically provided through an API or a web interface. Examples of closed-source models include:

- Claude by Anthropic, available via web chat interface and API.

- OpenAI’s GPT-3.5 and GPT-4, as well as embedding models like text-embedding-ada-002, accessible through APIs and chat interfaces.

Open-Source Models offer a different approach, with varying levels of openness regarding their internal architecture, training data, weights, and parameters. These models foster collaboration and transparency in the AI community. The levels of open-source models include:

- Fully Open Source: All aspects, including weights, architecture, and training data, are publicly accessible without restrictions.

- Open Weight: Only the model weights and parameters are available for public use.

- Open Model: Model weights and parameters are available under certain terms of use specified by the creators.

Open-source models democratize access to advanced AI technology, reducing the entry barriers for developers to experiment with niche use cases. Examples of open-source models include:

- LLaMA by Meta, a family of text generation models with varying parameter counts.

- Mixtral 8x7B by Mistral AI.

- Gemma by Google.

- Grok by xAI.

Open-Source vs. Closed-Source Large Language Models (LLMs)

AI engineers and machine learning practitioners often face the critical decision of whether to use open- or closed-source LLMs in their AI stacks. This choice significantly impacts the development process, scalability, ethical considerations, and the application’s utility and commercial flexibility.

Key Considerations for Selecting LLMs and Their Providers

Resource Availability

The decision between open- and closed-source models often hinges on the availability of compute resources and team expertise. Closed-source model providers simplify the development, training, and management of LLMs, although the provider may use customer data for training and teams give up some control over access to private data. Using closed-source models lets teams focus on other aspects of the stack, such as user interface design and data integrity. In contrast, open-source models offer more control and privacy but require significant resources to fine-tune, maintain, and deploy.

Project Requirements

The scale and technical requirements of a project are crucial in deciding whether to use open- or closed-source LLMs. Large-scale projects, particularly those needing robust technical support and service guarantees, may benefit from closed-source models. However, this reliance comes with the risk of being affected by the provider’s API uptime and availability. Smaller projects or those in the proof-of-concept phase might find open-source LLMs more suitable due to their flexibility and lower cost.

Privacy Requirements

Privacy concerns are paramount, especially regarding sharing sensitive data with closed-source providers. In the era of generative AI, proprietary data is a valuable asset, and some large data repositories are now requiring model providers to agree to AI data licensing contracts. The choice between open- and closed-source models involves balancing access to cutting-edge technology with maintaining data privacy and security.

Other factors to consider include ethical and transparency needs, prediction accuracy, maintenance costs, and overall infrastructure expenses. Results on benchmarks such as Massive Multitask Language Understanding (MMLU) show that open-source models are rapidly approaching the performance of closed-source models. This trend suggests that the choice between open and closed models may soon become secondary to other evaluative criteria.

Performance and Adoption Trends

The ease of integration often drives the adoption of closed-source models, as they typically require less engineering effort for deployment, being accessible via APIs. However, companies like Hugging Face and Ollama are making it easier to deploy open-source models, offering solutions that reduce the complexity traditionally associated with these models. This accessibility could lead to increased adoption of open-source models, especially as businesses prioritize transparency, data control, and cost-effectiveness.

Even major companies are embracing open-source models. For instance, Google has categorized some of its offerings as “open models,” which require agreement to certain terms of use despite being publicly available. This trend indicates a growing recognition of the value of open-source models in the AI ecosystem.

LLM Frameworks and Orchestrators

LLM frameworks and orchestrators, such as LlamaIndex, LangChain, Haystack, and DSPy, play a crucial role in the AI stack by bridging various components. These tools abstract the complexities involved in developing LLM-powered AI applications, including:

- Connecting vector databases with LLMs

- Implementing prompt engineering techniques

- Integrating multiple data sources with vector databases

- Managing data indexing, chunking, and ingestion processes

These frameworks reduce the amount of code developers need to write, allowing teams to focus on core features and accelerating the development process. However, they are not without challenges, particularly regarding stability, maturity, and frequent updates, which can lead to compatibility issues.
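As an illustration of one task these frameworks abstract away, the sketch below shows a simple chunking step: splitting text into fixed-size, overlapping character windows before embedding. The function name and default sizes are assumptions chosen for illustration; real frameworks typically offer more sophisticated splitters that respect sentence or token boundaries.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character chunks that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

The overlap means a sentence cut at a chunk boundary still appears intact in a neighboring chunk, at the cost of storing some text twice.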

LLM frameworks are essential tools that facilitate the integration of other AI stack components. However, the widespread adoption of these tools can also influence the choice of other components within the stack, potentially leading to standardization or fragmentation based on the frameworks used.

The discussion around LLM frameworks reflects broader trends in the tech stack world, where differing philosophies and approaches can lead to divisions, similar to those seen between Angular and React in web development or TensorFlow and PyTorch in machine learning. This division could also emerge in the AI stack as the field continues to evolve and mature.

DSPy and LangChain: Opinionated LLM Frameworks

DSPy: Systematic LLM Optimization

DSPy (Declarative Self-improving Language Programs, pythonically) is an LLM framework that emphasizes a programmatic and systematic approach to using and tuning LLMs within AI applications. Unlike approaches that rely heavily on hand-written prompts, DSPy modularizes the entire pipeline. It treats prompting, fine-tuning, and other pipeline components as parameters that an optimizer can tune systematically against objective metrics.

Example: RAG Module Using DSPy

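```python
import dspy

# GenerateAnswer is assumed to be a dspy.Signature defined elsewhere,
# with `context` and `question` inputs and an `answer` output.
class RAG(dspy.Module):
    def __init__(self, num_passages=3):
        super().__init__()
        # Retrieve the top-k passages from the configured retrieval model.
        self.retrieve = dspy.Retrieve(k=num_passages)
        # Chain-of-thought predictor driven by the GenerateAnswer signature.
        self.generate_answer = dspy.ChainOfThought(GenerateAnswer)

    def forward(self, question):
        context = self.retrieve(question).passages
        prediction = self.generate_answer(context=context, question=question)
        return dspy.Prediction(context=context, answer=prediction.answer)
```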

LangChain: Modular LLM Application Development

LangChain is another widely used LLM framework. It offers a declarative composition syntax, the LangChain Expression Language (LCEL), aimed at building production-ready AI applications. LCEL supports modularity and reusability through chain composition: pipeline components are configured with clear interfaces, which enables parallelization and dynamic configuration.

Example: RAG Module Using LangChain

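```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# `template`, `retrieve`, and `OPENAI_API_KEY` are assumed to be defined earlier:
# a prompt string, a retrieval step, and an API key.

# Defining the chat prompt
prompt = ChatPromptTemplate.from_template(template)

# Defining the model for chat completion
model = ChatOpenAI(temperature=0, openai_api_key=OPENAI_API_KEY)

# Parse output as a string
parse_output = StrOutputParser()

# Naive RAG chain
naive_rag_chain = (
    retrieve
    | prompt
    | model
    | parse_output
)
```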
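To show how chain composition with the `|` operator can work, here is a small pure-Python sketch. It is not LangChain's implementation: the `Step` class is hypothetical, and the four steps are canned stand-ins for retrieval, prompting, the model, and output parsing.

```python
class Step:
    """Wraps a function so that steps can be chained with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # Compose left to right: the output of self becomes the input of other.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value):
        return self.func(value)


# Hypothetical stand-ins for each stage of a naive RAG chain.
retrieve = Step(lambda q: {"question": q, "context": "Paris is the capital of France."})
prompt = Step(lambda d: f"Context: {d['context']}\nQuestion: {d['question']}")
model = Step(lambda p: {"content": f"(model reply to a prompt of {len(p)} characters)"})
parse_output = Step(lambda message: message["content"])

chain = retrieve | prompt | model | parse_output
result = chain.invoke("What is the capital of France?")
print(result)
```

Because every step exposes the same interface, any stage can be replaced or reused in another chain without touching the others, which is the modularity the declarative style is meant to provide.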

Vector and Operational Databases

Vector Databases: Efficient Handling of Complex Data

Modern AI applications increasingly require vector databases due to the growing complexity of data types like images, large text corpora, and audio. Vector embeddings, which are high-dimensional numerical representations of data, capture the context and semantics of raw data. These embeddings support recommendation systems, chatbots, and retrieval-augmented generation (RAG) applications by enabling context-based searches.

Vector databases specialize in storing, indexing, managing, and retrieving vector embeddings, optimizing for operations on high-dimensional vectors using algorithms like HNSW (Hierarchical Navigable Small World). The integration of vector databases with other AI stack components is crucial for maintaining seamless data flow and system efficiency.
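The core retrieval operation can be sketched with a brute-force nearest-neighbor search over cosine similarity. This is deliberately not HNSW: it compares the query against every stored vector, which is exactly the cost that approximate indexes are designed to avoid. The three-dimensional vectors and document IDs are hand-written placeholders for real embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2):
    """Return the k most similar (doc_id, score) pairs, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hand-written vectors stand in for embeddings produced by a model.
index = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.8, 0.3, 0.0],
    "doc_tax": [0.0, 0.1, 0.9],
}

results = top_k([1.0, 0.0, 0.0], index, k=2)
print([doc_id for doc_id, _ in results])
```

A linear scan like this is fine for a few thousand vectors; graph-based indexes such as HNSW trade a small amount of recall for much faster queries at larger scale.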

Operational Databases: Managing Data Complexity

Operational databases are essential for managing transactional, metadata, and user-specific data within AI applications. They provide high throughput, efficient transactional data operations, scalability, and data workflow management capabilities. Combining vector and operational databases can streamline data management, eliminating silos and enhancing system efficiency.

MongoDB: A Dual Role in AI Stacks

MongoDB serves as both a vector and operational database, supporting complex data storage needs, real-time processing, and vector search capabilities. Its infrastructure, including Atlas Search and Vector Search, provides a unified platform for managing diverse data types and applications.
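A vector query against such a unified platform might be expressed as an aggregation pipeline. The sketch below only builds the pipeline as plain Python data, so it runs without a database. The stage fields follow the documented shape of the Atlas `$vectorSearch` stage as best understood here and should be checked against current documentation; the index name `vector_index`, the `embedding` field, and the projected `title` field are hypothetical.

```python
def build_vector_search_pipeline(
    query_vector: list[float],
    index_name: str = "vector_index",  # hypothetical index name
    path: str = "embedding",           # hypothetical field holding the vectors
    limit: int = 5,
    num_candidates: int = 100,
) -> list[dict]:
    """Build an aggregation pipeline that runs a vector search, then projects results."""
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": query_vector,
                "numCandidates": num_candidates,
                "limit": limit,
            }
        },
        {
            # Keep a document field alongside the similarity score.
            "$project": {
                "_id": 0,
                "title": 1,
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


pipeline = build_vector_search_pipeline([0.12, 0.48, 0.31])
print(pipeline[0]["$vectorSearch"]["limit"])
```

With a driver such as PyMongo, a pipeline like this would be passed to a collection's `aggregate` method, which means operational fields and vector results come back from the same query.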

Monitoring, Evaluation, and Observability

LLM System Observability and Monitoring

Monitoring and evaluating LLM systems are crucial as AI applications transition from proof-of-concept (POC) to production. This involves assessing LLM performance, managing inference costs, and optimizing prompt engineering techniques to maintain output quality while reducing token usage.

Tools for LLM Observability

Tools like PromptLayer, Galileo, Arize, and Weights & Biases provide insights into the operational performance of LLMs, including output quality, system latency, and integration effectiveness. These tools help manage the unpredictability of LLM outputs, especially as the field of generative AI evolves.
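The kind of data these tools collect can be approximated with a small wrapper that records latency and token counts for each call and estimates cost. This is a sketch under stated assumptions: `fake_llm` is a canned stand-in for a real model call, whitespace splitting is only a rough proxy for a real tokenizer, and the per-million-token prices are made-up numbers.

```python
import time
from dataclasses import dataclass


@dataclass
class CallRecord:
    prompt_tokens: int
    completion_tokens: int
    latency_seconds: float


def estimate_cost(record: CallRecord, input_price: float, output_price: float) -> float:
    """Estimate cost in currency units, given prices per one million tokens."""
    total = record.prompt_tokens * input_price + record.completion_tokens * output_price
    return total / 1_000_000


def timed_call(llm_fn, prompt: str):
    """Call the model function and record latency plus approximate token counts."""
    start = time.perf_counter()
    reply = llm_fn(prompt)
    latency = time.perf_counter() - start
    # Whitespace token counts are a rough proxy; real tokenizers differ.
    record = CallRecord(len(prompt.split()), len(reply.split()), latency)
    return reply, record


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return "This is a canned reply."


reply, record = timed_call(fake_llm, "Summarize the quarterly report in one sentence.")
cost = estimate_cost(record, input_price=0.50, output_price=1.50)  # made-up prices
print(record.prompt_tokens, record.completion_tokens, cost)
```

Logging a record like this for every request makes it possible to see whether a prompt change that reduces token usage also changes latency or output quality.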

Conclusion

As AI technology and applications continue to mature, integrating robust frameworks, databases, and monitoring systems becomes increasingly vital. The choices made in these areas significantly impact the efficiency, scalability, and overall success of AI implementations.

LLM Evaluation: Understanding and Addressing Unpredictable Behaviors

What is LLM Evaluation?

LLM evaluation involves systematically and rigorously testing the performance and reliability of language models. This process is crucial for identifying and mitigating issues like hallucinations—where models generate incorrect or misleading information. Evaluation methods include:

- Benchmarking: Testing model outputs against standard datasets to assess quality.

- Human Feedback: Using human evaluators to gauge the relevance and coherence of outputs.

- Adversarial Testing: Identifying vulnerabilities in models through targeted challenges.

Reducing hallucinations remains an active research area, with ongoing efforts to detect and manage these inaccuracies before deploying systems.
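Benchmarking, the first method above, can be sketched as an exact-match accuracy score over a small set of reference answers. The normalization rules and the three sample question answers are assumptions for illustration; published benchmarks define their own datasets and scoring rules.

```python
def normalize(text: str) -> str:
    """Lowercase, drop a trailing period, and collapse repeated whitespace."""
    return " ".join(text.lower().strip().rstrip(".").split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that match their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical model outputs compared against hand-written reference answers.
references = ["Paris", "4", "William Shakespeare"]
predictions = ["paris.", "5", "William  Shakespeare"]

accuracy = exact_match_accuracy(predictions, references)
print(f"{accuracy:.2f}")
```

Exact match is strict and cheap to compute, which is why it is usually paired with human feedback or model-based grading for open-ended outputs.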

LLM Hallucination Index

The LLM Hallucination Index, developed by Galileo, evaluates language model hallucinations across tasks like Q&A, retrieval-augmented generation (RAG), and long-form text generation. It ranks models using metrics such as Correctness and Context Adherence.

The Role of Monitoring and Deployment in AI Applications

Monitoring and Observability

As described earlier, observability tools such as PromptLayer, Galileo, Arize, and Weights & Biases track the output quality, latency, and integration effectiveness of LLM systems. These metrics become more important to track as an application scales from POC to production.

Deployment Solutions

Major cloud providers and newer platforms facilitate the deployment of AI models:

- Google Vertex AI: Provides tools for model deployment, including AutoML and custom model support.

- Amazon SageMaker Inference: Offers scalable environments for real-time and batch processing.

- Microsoft Azure: Ensures secure and scalable deployment with integrated monitoring tools.

Emerging platforms like Hugging Face and LangServe offer specialized services for deploying transformer-based models and LLM applications.

Simplified Deployment for Demos

Tools like Gradio and Streamlit make it easy to create interactive web demos for LLM applications, facilitating rapid prototyping and demonstration.

The Evolving AI Stack: Collaboration and Competition

The AI stack is rapidly evolving, with components increasingly overlapping in functionality. For example, LangChain and LlamaIndex offer solutions across monitoring, deployment, and data management, integrating closely with databases and LLM frameworks. This trend towards a more integrated and comprehensive stack simplifies development but also increases competition among tools and platforms.

Future Directions

As generative AI applications mature, additional tools focused on cost reduction, efficiency, and broader functionality are likely to emerge. This includes efforts to extend context windows in LLMs and integrate sophisticated data ingestion and embedding processes, potentially disrupting current architectures like RAG.

Conclusion

The AI stack’s dynamic nature offers both opportunities and challenges, with tools and platforms continuously adapting to meet the needs of developers. For those building in this space, programs like MongoDB’s AI Innovators provide valuable support and resources. Engaging with the AI community can offer insights and collaboration opportunities as the landscape evolves.

FAQs

- What is an AI stack? An AI stack encompasses the tools, libraries, and solutions used to build applications with generative AI capabilities, including programming languages, model providers, LLM frameworks, and more.

- How does the choice between open-source and closed-source models affect AI projects? The choice impacts development processes, scalability, and ethical considerations, influencing factors like transparency, control, and cost.

- What role do programming languages play in the AI stack? Programming languages determine component selection and application architecture, with Python, JavaScript, and TypeScript being prominent choices.

- How do LLM frameworks simplify AI application development? Frameworks like LlamaIndex and LangChain abstract complex processes, facilitating connections between data sources and LLMs, and implementing prompt engineering techniques.

- Why is MongoDB popular for AI applications? MongoDB offers robust data management and search capabilities, efficiently handling unstructured data and integrating well with LLM orchestrators and frameworks.